A data scraping workshop at GIJC23. Image: Smaranda Tolosano for GIJN

No Coding Required: A Step-by-Step Guide to Scraping Websites With Data Miner

Read this article in

Knowing where to look for data — and how to access it — should be a priority for investigative journalists. Effective use of data can not only improve the overall quality of an investigation, but increase its public service value.

Over the last 20 years, the amount of data available has grown at an unprecedented rate. According to the International Data Corporation (IDC), by 2025 the collective sum of the world’s data will reach 175 zettabytes (one zettabyte is one trillion gigabytes; as IDC puts it, if one could store the 2025 datasphere onto DVDs, the resulting line of DVDs would encircle the Earth 222 times).

Some estimates claim that Google, Facebook, Microsoft, and Amazon alone store at least 1,200 petabytes (one petabyte = one million gigabytes) of data between them. Investigative and data journalists are using more quantitative, qualitative, and categorical data than ever before — but obtaining good data is still a challenge.

Access to, or finding, structured data — defined as data in a clearly defined, standardized format ready for analysis — from the oceans of bad or incomplete data (including false data, dirty, faulty, or “rogue” data, fake data, scattered data, and unclear data) is still difficult, no matter the field. Part of the solution to this problem is increasing data literacy: we need to understand how data is collected, cleaned, verified, analyzed, and visualized, because it is an interconnected process. For journalists, data literacy is crucial.

In data journalism, as with any kind of journalistic practice, we look for ways to access all kinds of data, such as from leaks, from thousands of pdf files, or from indexes recorded on websites — organized or not. Some of these are easy to access, while others require technology to access, which takes time.

However, there are tools and methods that make it both enjoyable and simple — such as scraping data from websites. Scraping in this manner means using computer programs or software to extract or copy specific data from websites. This process can be used to collect or analyze the data, and it is faster and more efficient than acquiring data manually.

The benefits of data scraping for journalists include:

- Speed and scope: Data scraping allows journalists to gather information quickly and efficiently. Pulling data from a variety of sources across the internet gives you a broader perspective, and helps you base your stories on a more solid foundation.

- Verification: Data scraping can help journalists in the verification process. You can compare data to check information on the web and spot contradictions, which helps verify information and increase its credibility.

- Uncovering trends: Data scraping can be used to uncover patterns related to a particular topic or event. By analyzing large datasets, you can, for example, understand trends in social media or public opinion and integrate this information into your news.

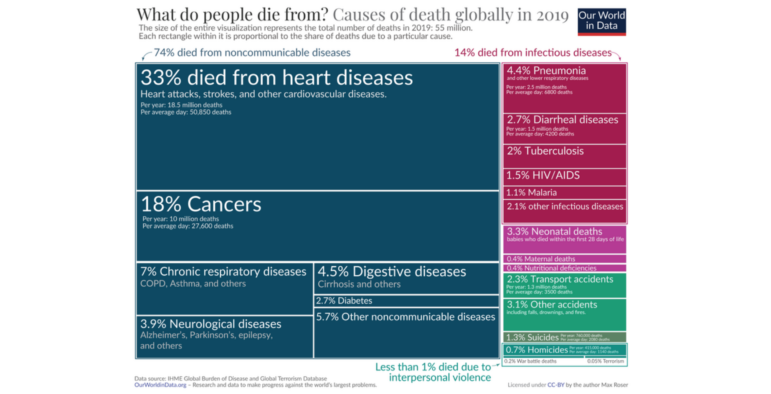

- Data visualization: Visualizing the data collected by data scraping helps journalists present their stories more effectively. By using graphs, charts, and interactive visuals, you can make the data more understandable and give readers a better understanding of the topic.

- Enabling in-depth investigation: Data scraping allows journalists to conduct more in-depth research. By analyzing large datasets, for example, in financial data, you can gain a deeper understanding of company operations or government policies.

- Increasing news value: Data scraping can lead to newsworthy stories. Statistics, trends, demographics, or other data can make your stories more engaging and compelling.

Data Miner is a free data extraction tool and browser extension that enables users to scrape web pages and collect secure data quickly. It automatically collects data from web pages and saves it in Excel, CSV, or JSON formats.

However, keep in mind that collecting data from websites in bulk may violate their terms of use, or the law. It’s important to read the website’s terms of use carefully before using a browser extension or plugin, and to act in accordance with all legal rules and regulations. You should also review the terms of service of the extension you are using.

GIJN Turkish editor Pınar Dağ, the author of this story, gives a presentation on using Data Miner at GIJC23 in Gothenburg. Image: Smaranda Tolosano for GIJN

How Journalists Can Use Data Miner

Here are the steps for scraping a website with the Data Miner browser extension.

1. Install the Data Miner add-on to your browser. The add-on is generally available for browsers such as Chrome or Firefox. Find and install the Data Miner add-on from your browser’s add-on store.

- Open the target website. Open the website from which you want to scrape data in your browser, and launch its extension — or in other words, find Data Miner in the extension/plugins menu in your browser and open it. The extension is usually located in the top right corner of your browser.

3. Create a new task/recipe for web scraping. The Data Miner extension has a “My Recipes” option. Click this option to create a new web scraping task. You will be presented with a command screen to continue the mining process.

4. Set options for scraping the website: Data Miner has various options and settings for scraping a website. For example, you can specify which data you want to scrape, and you can set automatic actions, such as page navigation or form filling.

- Start scraping the website. Once you have finalized the settings, you can start the data scraping by clicking the “Scrape” button in the Data Miner extension dashboard. The extension will crawl the website and collect the data you have specified. (You can also watch the process in this short video.)

- Save or export the data. You can usually save your scraped data as a CSV file or Excel spreadsheet. You can also copy the scraping screen using the Clipboard feature — a convenient and time-saving feature. If your scraped data is more than 10,000 rows, it will be downloaded as two separate files.

By following these steps, you can scrape one or multiple websites with Data Miner, and you can run any of the 60,000-plus data scraping rules, or create your own customized data scraping method to get only the data you need from a web page, because it is possible to create single page or multi-page automatic scraping.

You can automate scraping and can run batches of scraping jobs, based on a list of website URLs. Plus, you can use 50,000 free, pre-made queries for more than 15,000 popular websites. You can also crawl URLs, paginate them, and scrape a single page from a single location — no coding required.

Using the extension also has the following advantages.

- It helps you use it safely and securely: It behaves as if you are clicking on the page yourself in your own browser.

- It helps you scrape without worry: It’s not a bot, so you won’t get blocked when you make a query.

- It keeps your data private: The add-on does not sell or share your data.

Pınar Dağ is the editor of GIJN Turkish and a lecturer at Kadir Has University. She is the co-founder of the Data Literacy Association, Data Journalism Platform Turkey, and DağMedya. She works on data literacy, open data, data visualization, and data journalism, and is on the jury of the Sigma Data Journalism Awards.

Pınar Dağ is the editor of GIJN Turkish and a lecturer at Kadir Has University. She is the co-founder of the Data Literacy Association, Data Journalism Platform Turkey, and DağMedya. She works on data literacy, open data, data visualization, and data journalism, and is on the jury of the Sigma Data Journalism Awards.