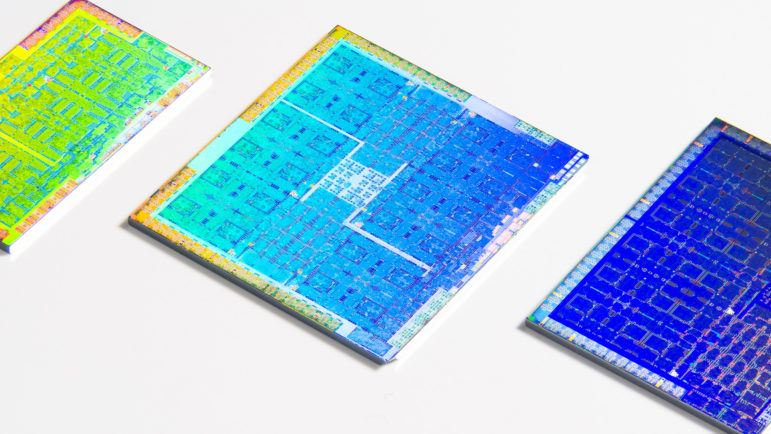

The GPU (graphics processing unit) is an essential part of modern AI infrastructure. It’s a special type of chip or electronic circuit that is used in mobile phones, personal computers, game consoles, workstations, and cloud computing infrastructure. Image: Fritzchens Fritz/Better Images of AI/CC-BY 4.0

10 Things You Should Know About AI in Journalism


Editor’s Note: Mattia Peretti manages JournalismAI — a research and training project at Polis, the international journalism think tank of the London School of Economics — which helps news organizations use artificial intelligence (AI) more responsibly. At a Media Party innovation conference in Buenos Aires, he shared 10 essential points that reporters should know about AI in journalism — such as how it can help news organizations, why he thinks we should stop calling it AI, and the need to hold accountable the people and systems behind it when it’s used irresponsibly.

Here are 10 things I learned, and 10 things you also should know, about AI in journalism:

1. AI Is Not What You Think

First of all: AI is probably not what you think. Science fiction has conditioned us to picture robots and dystopian futures in which they fight us for control of the universe. But the “AI” we are talking about in the context of journalism is more similar to a spreadsheet than to any kind of robot.

By “AI,” we mean “a collection of ideas, technologies, and techniques that relate to a computer system’s capacity to perform tasks that normally require human intelligence.”

There is an important distinction to keep in mind. The AI that currently exists is Artificial Narrow Intelligence: computer programs that can perform a single task extremely well, even better than we can. The AI depicted by science fiction is Artificial General Intelligence, the idea that machines can be made to think and function like the human mind. Right now, that is nothing more than an idea. A hope, for some.

All in all, I would go as far as to say that it would be better if we all stopped calling it AI, because of the misconceptions these two letters inevitably create when they come together. Any time you read “AI” (or write it in your articles, if you are a journalist), stop for a second and think about what other word could replace it in that sentence. Maybe it’s an algorithm, or automation, or a computer program. That little replacement exercise will help your understanding, and your readers’ too.

And it’s not only about words, either. AI as a field is deeply misrepresented in images as well. Type “artificial intelligence” into your search engine and you get humanoid robots, glowing brains, outstretched robot hands, and sometimes the Terminator.

These images fuel misconceptions about AI and set unrealistic expectations about its capabilities. Better Images of AI decided to do something about it. It’s a non-profit initiative that is researching the issue and curating a repository of “better” images to represent AI.

2. AI Is Not Stealing Your Job

AI is not stealing your job. Read this out loud: “AI is not stealing my job.” Let that sink in.

The truth is that artificial intelligence is not nearly as intelligent as it would need to be to replace you. It can take away some tasks that we normally do. But it’s us who decide what those tasks are, based on what tools we decide to build with AI.

It’s not a coincidence that, in the context of journalism, AI currently does mostly boring and repetitive tasks that we don’t really enjoy doing anyway. Things like transcribing interviews, sifting through hundreds and hundreds of leaked documents, filtering reader comments, or writing the same story about companies’ financial earnings, for hundreds of companies, every three months. No one got into journalism because they were looking forward to doing those things again and again.

AI can do many things to support your work and, like every technological innovation before it, it is changing newsroom roles. But then it’s up to us. We decide what to ask AI to do. On its own, it has neither the ambition nor the ability to steal our jobs anytime soon.

3. You May Not Need AI

My goal working on JournalismAI is not to tell you that you should use AI. The mission of my team is to ask what problem you are trying to solve, and help you figure out if AI can be part of the solution.

Honestly, often it is not, because you may be able to solve your challenges or achieve your goals with other tools that are easier to use, less expensive, and more secure.

Do not get into the AI space out of FOMO, the fear of missing out. Do not give in to all the fancy headlines telling you that AI will revolutionize journalism or even “save it,” because it will not.

Do you want to use AI? Tell me your use case first.

4. You Need A Strategy

Now, let’s say you have done your homework. You analyzed your use case, understood what AI can and cannot do, and you reached the conclusion that indeed using AI could help you. It’s not time to celebrate yet because I have to warn you: Implementing AI can be hard. You need to think strategically.

Sometimes we talk about “implementing AI” as if it were a single process. But in reality, depending on your use case and the type of technology (AI is an umbrella term with many subfields), that process may be completely different from the one you would go through for another use case.

Using a machine learning model to filter the comments you receive from your readers, for example by automatically tagging potentially harmful ones, requires a completely different process than optimizing your paywall to maximize your chances of turning a sporadic reader into a paying subscriber.

Understand your use case and design an implementation strategy that is specific to your use case as well as to the strengths and weaknesses of your organization.

5. Tools: Buy, Build, Or…

“Using AI” means using some technological tools that can help your work as journalists. So the question is, where do you get those tools?

The debate in the industry lies mostly around the buy vs build dichotomy: Do you build AI tools in house to serve your specific purpose, or do you buy existing tools and adapt them to your needs? It’s a hard choice, and it’s not that one option is right and the other is wrong.

Famously, two international news agencies that have been at the forefront of AI innovation have taken different approaches: Reuters builds most of its AI tools in house, while the AP buys tools by working with startups and vendors in the open market.

Making a full list of pros and cons would take too long, but what I want you to understand is that, once again, you have to find your own way. You must consider what you are trying to do, evaluate the costs of both options, consider carefully what skills you already have in your team or what you can easily acquire, and chart a path that works for you.

The good news is that you are not alone. If you decide to build, it’s likely that others in the industry have already taken that route and that you can learn from them. And if you decide to buy, there are many tools available out there, and not all of them are as expensive as you may think.

But the secret is that buy vs build is a false dichotomy.

There’s a third route: partnering with startups or academic labs that may have the skills you need, may have done a lot of useful research already, and could be eager to work with you to apply their theoretical learnings to a practical use case.

A good way to begin this journey is to have a look at the JournalismAI Starter Pack, an interactive resource that lets you explore what AI might do for your journalism.

6. Unicorns Exist

This point is actually two points but they are both about talent.

One of the biggest challenges for news organizations that are getting into AI is finding or acquiring the technical skills they need to implement their AI-related projects. It’s hard because, let’s face it, journalism is a small industry compared to many others that are more advanced in the use of AI. And we don’t have loads of money to throw at computer scientists and engineers either.

Journalism does have something that not all industries can offer, though. We are a mission-driven industry where work is done not just for profit but for the betterment of society. This matters to people. Not to everyone, but to many.

You have to leverage your mission. You won’t convince technical people to work for you by outbidding companies in other industries or by offering other perks. Your mission is what you have to sell. Focus on attracting people who want a job that offers them a purpose and not just profit.

The second part of this point is that you don’t need to have “AI” in your job title to work on AI projects. Through our fellowship programs and collaborative initiatives, I learned that what AI projects need more than anything are interdisciplinary teams. People from editorial, product, and technical departments working together make the best AI projects.

And you can train to be part of this too. There are lots of free courses online — some more technical, others more strategic — that you can take to learn more about AI, maybe learn to code if that’s what you aspire to, and build up your skills so that you can get a seat at the table. You can be part of that interdisciplinary team working on the next AI project in your organization.

7. Collaboration is Essential

The interdisciplinary teams we just mentioned are one layer of collaboration that your AI projects can benefit from. Same for the idea of partnering with startups and academic labs to help your projects come to life. But there’s more.

Someone in another news organization is facing the same challenges as you and may be trying to build the exact same thing you have in mind. Maybe they are your direct competitors, or maybe they are on the other side of the planet; it doesn’t matter. When it comes to AI, collaboration is your biggest asset. You can decide to go it alone, but unless you have the resources of the New York Times, you are likely to be left behind.

For three years now, my team has been helping news organizations from across the world collaborate on AI projects and I can tell you that it’s possible. It’s not easy, it takes effort and trust, but it’s possible and absolutely worth it.

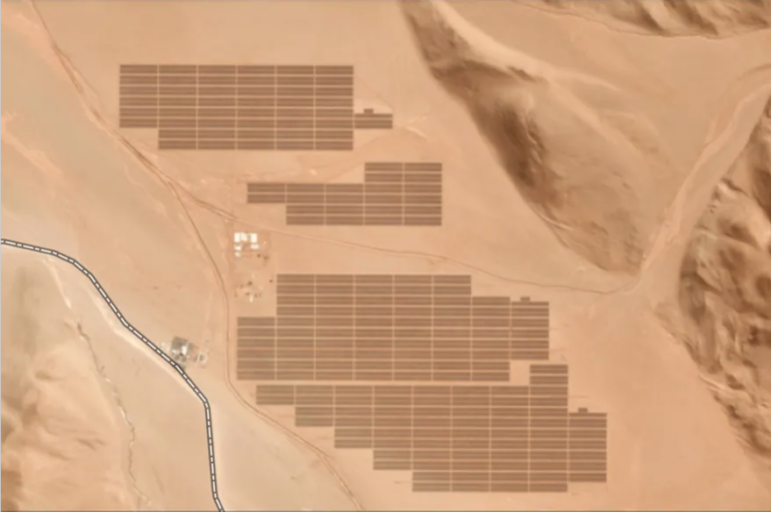

From using computer vision with satellite imagery to power investigative reporting on environmental issues, to using AI tools to track the impact of influencers on our society or to detect hate speech directed at journalists and activists, our fellows and participants have been doing incredible work with AI by embracing cross-border collaboration. You should consider it too, if you are not already doing it.

8. You Need A Space to Experiment

“There is so much progress in these models, and so quickly, that it’s hard to keep up.” That’s what an expert in our network told me in a recent conversation. And they are right. Things change very quickly in the field of AI.

New tools are released seemingly every other day, and new scientific papers are published daily. Trying to keep up with everything is just not realistic. But that shouldn’t be your ambition either. Keep your ears wide open for interesting developments, but be skeptical of what you hear and learn to recognize what you should truly pay attention to. You don’t need to test every single fancy tool that is released.

You need to design a strategy that is flexible and adaptive in order to navigate such a fast-evolving space. The first step should be to build a space in your organization or join opportunities outside your organization (like the ones we offer) where you can safely experiment with AI without the pressure of immediate delivery.

Your AI team or your AI people must be given a safe space and the time to fail and learn from failure.

9. Understand AI to Report On It

AI is becoming more and more present in every part of society. And often it’s not used in a responsible way, with disastrous consequences for certain segments of the population. The world needs journalists to report on AI and hold these systems and the people behind them accountable.

There are more and more journalists doing fantastic work on algorithmic accountability, and initiatives like the AI Accountability Network of the Pulitzer Center are allowing more reporters to build their skills for this critical purpose.

What I learned is that this is best done once you understand how AI works through direct experience. Teams like the AI and Automation Lab of Bavarian Radio in Germany are positioning themselves as leaders in this field because they were smart enough to recognize this: they created a team, a dedicated lab, that is responsible both for using AI in product development and for algorithmic accountability reporting.

You can report on AI much better if you know how to use it — and you will be much more responsible in using it if you understand the risks and potential consequences.

10. A Transformational Opportunity

After almost four years working on JournalismAI, I am convinced that we have a transformational opportunity. I’m not thinking of the examples of automation we listed earlier, although I recognize the efficiency they provide.

I’m referring to the opportunity to use AI to gather the insights we need to make our journalism better: creative and inspiring ideas for using data and AI to better understand what we produce and how we produce it.

Examples range from using AI to understand, identify, and mitigate existing biases in our newsrooms, to a project in our current fellowship that aims to use NLP (Natural Language Processing) tools to analyze a publication’s content and spot underreported topics, making its coverage more inclusive. Some go even further, rethinking the core journalistic product by atomizing the article to create innovative storytelling formats that focus more on modern user needs.

These are opportunities to use technology to transform our journalism into something more diverse, inclusive, and responsible. That’s why I’m excited about AI: by saving journalists some time and making their work more efficient, it frees them to use the skills that truly make us human, like empathy and creativity.

To wrap up, I invite you to take a balanced approach to AI: Be skeptical, understand the limitations of the technology and its risks, and be careful and responsible once you decide to use it. But while you do all of that, allow yourself to get excited about the opportunities it opens up.

It’s on us to decide if we want to sit back and wait for AI to impact our journalism in ways we don’t understand, or if we want to own it and use AI to make a positive impact in our societies.

This post was originally published on the London School of Economics’ Polis blog and is reprinted here with permission.

Additional Resources

AI Journalism Lessons from a 150-Year-Old Argentinian Newspaper

Journalists’ Guide to Using AI and Satellite Imagery for Storytelling

Deepfake Geography: How AI Can Now Falsify Satellite Images

Mattia Peretti is the manager of JournalismAI, a research and training project supported by the Google News Initiative and run by Polis, the journalism think tank of the London School of Economics & Political Science.