Data Journalism

How Non-Coding Journalists Can Build Web Scrapers With AI — Examples and Prompts Included

With basic web knowledge, guidance, and examples, even non-coding journalists can build a scraper with large language models.

Many reporters never notice the “inspect element” option below the “copy” and “save-as” functions in the right-click menu on any webpage related to their investigation. But it turns out that this little-used web inspector tool can dig up a wealth of hidden information from a site’s source code, reveal the raw data behind graphics, and download images and videos that supposedly cannot be saved.

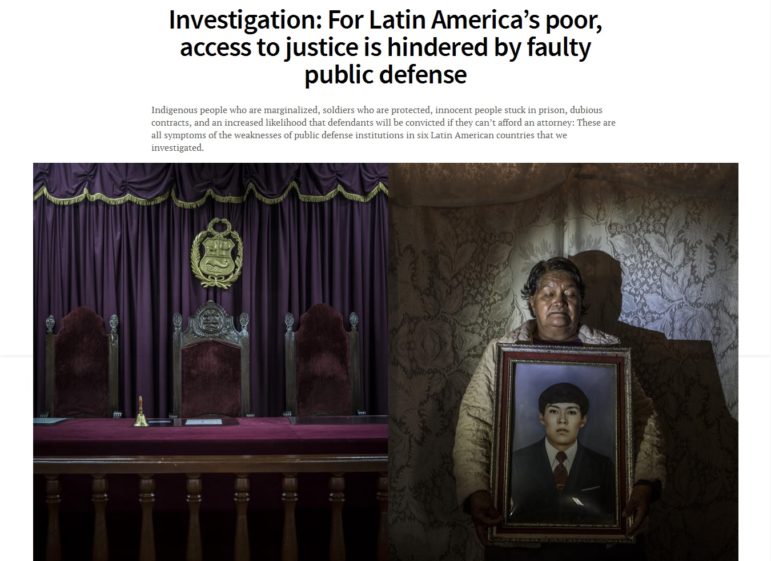

Journalists have used scrapers to collect data that rooted out extremist cops, tracked lobbyists, and uncovered an underground market for adopted children. The Markup recently made the case for web scraping in an amicus brief filed in a United States Supreme Court case that threatens to make the practice illegal. Here’s why they did it.

If you didn’t make it to Seoul for this year’s Uncovering Asia conference — or couldn’t be at two panels at the same time — never fear, tipsheets from our impressive speakers are here! But just in case you can’t decide where to start, here are five presentations that are definitely worth checking out.

Four years of work and 8,000 judicial rulings later, the team at Univision Data shows how in Costa Rica, a person is more likely to be convicted of a crime if they are assigned a public defense attorney than if they have a private one. Their methodology included web scraping, R and logistic regression — a statistical method common in social sciences but practically unexplored in newsrooms.

As 2015 nears an end, we’d like to share our top 12 stories of the year — the stories that you, our dear readers, found most compelling. The list ranges from free data tools and crowdfunding to the secrets of the Wayback Machine. Please join us in taking a look at The Best of GIJN.org this year.

Web scraping is a way to extract information presented on websites. As I explained in the first installment of this article, web scraping is used by many companies. It’s also a great tool for reporters who know how to code, since more and more public institutions publish their data on their websites.

With web scrapers, which are also called “bots,” it’s possible to gather large amounts of data for stories. But what are the ethical rules that reporters have to follow while web scraping?

$8 billion in just a few hours earlier this year? It was because of a web scraper, a tool that companies use, as do many data reporters. A web scraper is simply a computer program that reads the HTML code from webpages and analyzes it. With such a program, or “bot,” it’s possible to extract data and information from websites.
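To make the idea of "reading the HTML code and analyzing it" concrete, here is a minimal sketch in Python using only the standard library. The sample page, the `LinkExtractor` class, and the `extract_links` function are all hypothetical names invented for illustration. A real scraper would first download the page, which this sketch skips by working on a hard-coded string.

```python
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<html><body>
  <h1>Press releases</h1>
  <ul>
    <li><a href="/releases/1">Budget report published</a></li>
    <li><a href="/releases/2">New inspection results</a></li>
  </ul>
</body></html>
"""


class LinkExtractor(HTMLParser):
    """Collects (href, link text) pairs from every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return a list of (href, text) tuples."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


if __name__ == "__main__":
    for href, text in extract_links(SAMPLE_HTML):
        print(href, text)
```

The same pattern (walk through the tags, keep the pieces you care about) applies to tables, headlines, or any other repeated element on a page. Many scrapers use third-party parsing libraries instead, but the standard-library parser is enough to show the principle.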