Image: Shutterstock

Chaos and Credibility: A Snapshot of How AI Is Impacting Press Freedom and Investigative Journalism

Since the UN General Assembly first proclaimed World Press Freedom Day 32 years ago, journalistic freedom has experienced a series of seismic shifts, from a proverbial thousand news outlets blooming in the wake of the Cold War and the Arab Spring to a slow ratcheting back of control and independence due to a resurgence of war and authoritarian repression.

Ominously, Reporters Without Borders’ recently released World Press Freedom Index found that the global state of press freedom has reached an unprecedented low point. On average, RSF ranks the worldwide press freedom situation as “difficult” and conditions for journalism are now assessed as “poor” for half of all countries.

Amid this press turmoil, there’s been a corresponding, paradigm-shifting technological development, as artificial intelligence, large language models, and predictive algorithms have appeared all across the media landscape. This potentially breakthrough technology brings both promise and peril with it, however. The smallest of newsrooms can now leverage AI tools for powerful new reporting capabilities, but this technology also threatens the traditional news business model while offering bad-faith actors and political extremists a dangerous new weapon for spreading misinformation and eroding trust in the press.

Understanding how best to cover AI and incorporate it into reporting and editing practices was the driving force behind the Paris Charter on AI and Journalism, which GIJN joined as a partner two years ago. The charter’s commission, which included 32 prominent persons from 20 different countries, set out to define 10 key principles for safeguarding the integrity of information and preserving journalism’s social role.

“While AI has added exciting opportunities to investigative journalists’ toolboxes, it also poses various challenges,” GIJN Executive Director Emilia Díaz-Struck said at the Charter’s launch. “GIJN is honored to join the conversations on how AI can help investigative journalism, and how our community can overcome some of the challenges AI poses while reporting on stories that explore broken systems and hold the powerful to account.”

Two years later, on this World Press Freedom Day, the United Nations has likewise put a special emphasis on understanding the ongoing impact of AI in its report: “Reporting in the Brave New World: The Impact of Artificial Intelligence on Press Freedom and the Media.”

“AI is transforming the fundamental right to seek, impart and receive information, as well as the journalistic profession,” the report noted in its executive summary. “It enhances access to information and processing of it, enabling journalists to handle and process vast amounts of data efficiently and create content more effectively.”

“It also presents risks. It can be used to reproduce misinformation, spread disinformation, amplify online hate speech, and enable new forms of censorship,” the report cautioned. “Some actors use AI for mass surveillance of journalists and citizens, creating a chilling effect on freedom of expression. Private platforms increasingly use AI to filter, moderate, and curate content, becoming gatekeepers of information.”

Another more direct concern about AI’s impact on press freedom and journalism involves the technology being touted as a way to supercharge productivity while trimming editorial staff. As the news industry experiences widespread financial difficulties and staff cutbacks, supplanting real reporters and editors with AI could further accelerate job losses among working journalists. In a recent, extreme example, the news site Quartz fired almost all of its editorial staff after being acquired by a new owner, a move seen as the site fully embracing a strategy of AI-generated content. And just last month, the Italian newspaper Il Foglio said it published, as an experiment, the first-ever, completely AI-generated newspaper, which included writing the stories, headlines, and even the quotes (from people who don’t exist).

Investigative journalism, however, stands apart as an antidote to the scourge of AI manipulation and misinformation. Reporting that involves systematic inquiry — multiple sources, data analysis, fact checking — simply can’t be replicated by machine learning or a supercomputer. Watchdog reporting offers readers a vital service that they cannot get elsewhere. And for that reason, protecting the independence of journalists to expose wrongdoing and hold the powerful to account is paramount.

“Members from our community have been able to uncover stories on misinformation, scams, and human rights abuses powered by AI,” GIJN’s Díaz-Struck said on this World Press Freedom Day. “At the same time, as a tool, it has offered new possibilities to investigative journalists to mine millions of records, and identify key leads that have been valuable in the reporting process. AI keeps evolving and we need to hold it accountable, while understanding its possibilities and limitations.”

Exposing AI Threats to Democracy

Though AI continues to struggle with simulating even the most basic aspects of writing a news story, the rise of algorithmically generated content can still have an insidious effect on both journalism and civil society. By muddying the waters about which sources the public can rely upon, it ultimately lowers overall trust in the press. As the Reuters Institute reported last November, reporting by professional journalists can get lost in a deluge of SEO-optimized, low-quality “AI slop” sites. Relatedly, in the lead-up to the 2024 US election, NewsGuard identified nearly 1,200 “pink slime” sites, which use AI-generated stories to mimic trusted local newspapers or TV stations in order to push partisan narratives and undermine legitimate reporting.

“Generative AI enables the creation of misleading content like deepfakes, undermining trust in democratic institutions,” the UN report noted.

GIJN dug into this threat last year as part of its year-long 2024 Elections project. A key story in that series sought to educate reporters around the world on how to identify and investigate AI-generated audio deepfakes, after they had been deployed to mislead and sway voters in countries like Pakistan and Bangladesh, and arguably swung the election to the pro-Russia party in Slovakia. Other news outlets also monitored AI election manipulation around the world in 2024, and found instances in Mexico and Indonesia, along with other Asian countries.

A Financial Times investigation last year similarly found that AI-generated voice-cloning tools had been used in the 2024 US Democratic presidential primary (to falsely simulate then-President Joe Biden telling people not to vote), as well as in the 2023 elections in Sudan, Ethiopia, Nigeria, and India.

Hera Rizwan, a reporter for India’s Decode, has published several stories exposing how AI can be used to sow confusion in the country’s electorate. This comes as India’s press situation has fallen into an “unofficial state of emergency” in recent years, according to RSF.

“AI is definitely reshaping the ecosystem we operate in,” Rizwan told GIJN. “The worrying part is that it’s putting a lot of power in the hands of big tech platforms and governments — many of whom aren’t exactly champions of press freedom. We’re already seeing AI being used in surveillance tools like facial recognition and predictive policing. In countries where the law doesn’t do much to protect journalists or citizens, this kind of tech can be deeply intimidating. It makes people scared to speak up or take part in protests, and that fear spreads to the press too.”

Outside of elections, AI is also being weaponized to shape public opinion about the war in Ukraine. In coverage from The Insider, reporter Dada Lyndell worked on a series investigating the so-called Matryoshka disinformation campaign, which manufactured fake AI images to paint Ukraine’s leadership as weak and bloodthirsty while encouraging Ukrainians to call for a surrender to Russia.

The Russian-language news site The Insider uncovered an online propaganda campaign that used fake, AI-generated images to push pro-Kremlin messaging to Ukrainians. Image: Screenshot, The Insider

Digging Into Algorithms and Their Consequences

Understanding the deeper, systemic implementation of AI — and holding those who employ it accountable for its impact — has also become a key investigative avenue for journalists.

Initiatives like the Pulitzer Center’s AI Accountability Network seek to “address the imbalance on artificial intelligence that exists in the journalism industry and to create a multidisciplinary and collaborative ecosystem that enables journalists to report on this fast-evolving topic with skill, nuance, and impact.”

One recent example of its work was a joint US-Brazil collaboration by Núcleo that uncovered a number of Instagram user profiles pushing rampant AI-generated child abuse content, which parent company Meta’s security protocols were ineffective in stopping. Within days of the report, however, Meta had removed all 14 profiles in response to the exposé.

Al Jazeera, as part of the same Pulitzer Center initiative, found that thousands of India’s poor had been denied food and thousands more were mistakenly declared dead based on errors made by an AI algorithm used by the country’s welfare system.

These revelations followed a similar exposé by the Netherlands-based Lighthouse Reports a year earlier. As part of a four-part series in collaboration with the Pulitzer Center, Wired, and several other outlets, Lighthouse Reports dug into the algorithmic bias embedded in that country’s welfare fraud detection software. The resulting investigation, Suspicion Machines, interrogated the risk scoring system’s inputs and design choices and found that the machine learning models routinely discriminated against welfare recipients based on ethnicity, age, gender, and parental status.

In accepting the inaugural Access to Info Impact Award last year, Lighthouse Reports reporter Gabriel Geiger explained that press freedom and transparency were key to its success investigating the algorithm from the inside out. “This meant devising and implementing a large-scale freedom of information campaign across European countries with vastly different access to information regimes,” he said. “Government agencies resisted us at every turn. Collaborating with local reporters in each country, we went to court, filed complaints with Ombudsman offices, and waited for over a year to obtain access.”

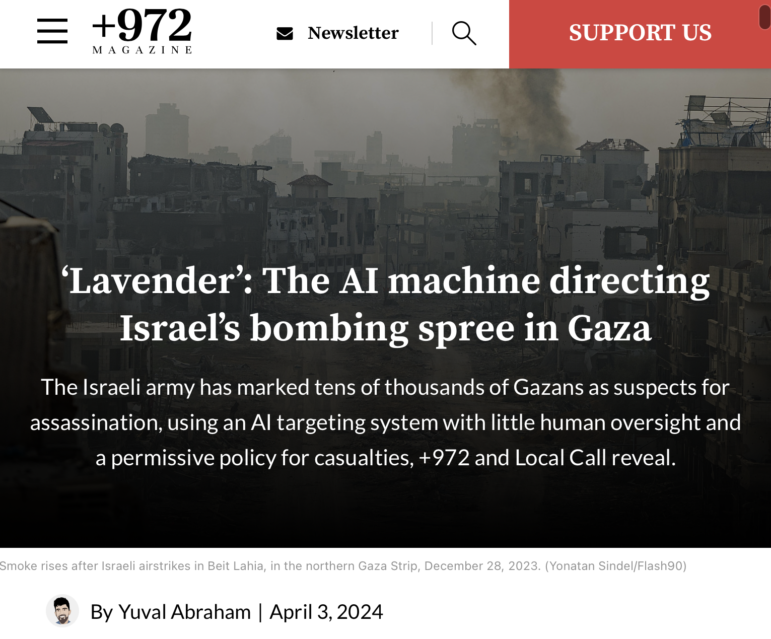

A blockbuster example of investigating AI algorithms was published a year ago, as part of a collaboration between the independent Israeli outlets +972 and Local Call. Together, they revealed how the Israel Defense Forces (IDF) were using an AI program, “Lavender,” to direct bombing inside Gaza with little oversight and a high tolerance for civilian casualties due to its preference for targeting private homes at night.

In a methodology post that followed the initial exposé, +972 reporter Yuval Abraham explained how his team interviewed six whistleblowers as part of the reporting, a reminder that a key element of covering AI implementation involves understanding the humans empowering the technology. Abraham discovered that, from the earliest days of the war following the October 7 Hamas terror attack, the IDF began to delegate life-or-death calls to the AI targeting model.

“According to the sources, the army gave sweeping approval for soldiers to adopt Lavender’s kill lists with little oversight, and to treat the outputs of the AI machine ‘as if it were a human decision,’” Abraham noted. “While the machine was designed to mark ‘low level’ military operatives, it was known to make what were considered identification ‘errors’ in roughly 10% of cases.”

Leveraging AI as a Powerful Newsroom Tool

Despite all these concerns about AI’s role in the media ecosystem and civil society, it can still have a positive role to play in modern-day journalism. In fact, many watchdog reporters have adopted AI and LLMs as force multipliers in their newsrooms. And when deployed smartly with proper editorial controls, AI tools can prove especially useful for small independent and nonprofit media that lack the financial resources and staff of bigger news outlets.

At the very minimum, reporters can use LLMs like ChatGPT to streamline their early reporting process and search for initial storylines to pursue. “ChatGPT gives you a list of go-to experts and their numbers in five seconds, instead of having to spend 40 minutes trawling through Google,” KUSA-TV investigative reporter Jeremy Jojola told GIJN last year.

But investigative journalists with more technical know-how can widen the scope of AI’s assistance, giving them more time and resources to focus on higher-level reporting and writing. GIJN spoke with several past graduates of our Digital Threats training course for insights on how they are employing AI in their newsrooms.

“I’ve been developing open-source projects that apply artificial intelligence to support investigative journalism,” Abraji’s Reinaldo Chaves told GIJN. Chaves has created a number of innovative AI newsroom tools and posted them on his GitHub page. “These tools are designed both to streamline the daily workflow of my team — such as investigating disinformation, analyzing documents, and reviewing legal cases with custom chatbots (prompts, configuration, and context for journalism) — and to serve the broader community of journalists through public apps and repositories.”

For a recent Arabi Facts Hub investigation into fake accounts engaging in a social media campaign after the death of Hezbollah leader Hassan Nasrallah, Ibrahim Helal used AI to sift through a trove of online posts and discover their true origin: Saudi Arabia and Iran. “I used AI models to analyze thousands of posts on X (formerly Twitter). These tools helped me detect bot behavior by identifying patterns in timing, repetition, and network activity,” Helal told GIJN. “I also used natural language processing (NLP) to cluster accounts based on how they write and engage, which revealed groups of commercial accounts likely hired to amplify content.”
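The timing-and-repetition signals Helal describes can be illustrated with a very small sketch. This is not his method or tooling: it is a plain rule-based stand-in, assuming posts arrive as hypothetical `(account, timestamp, text)` tuples, and the function names, the five-minute window, and the three-account threshold are all illustrative choices.

```python
from collections import defaultdict
from datetime import datetime, timedelta
import re


def normalize(text):
    """Lowercase and drop URLs, mentions, and punctuation so near-identical posts match."""
    text = re.sub(r"https?://\S+|@\w+", " ", text.lower())
    return " ".join(re.findall(r"\w+", text))


def flag_coordinated(posts, window=timedelta(minutes=5), min_accounts=3):
    """Return (text, accounts) groups where many accounts posted the same text close together.

    `posts` is an iterable of (account, timestamp, text) tuples (assumed schema).
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        by_text[normalize(text)].append((ts, account))

    flagged = []
    for text, items in by_text.items():
        items.sort()  # chronological order
        start, best = 0, set()
        for end in range(len(items)):
            # Shrink the sliding window until it spans at most `window` of time.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            flagged.append((text, sorted(best)))
    return flagged
```

A group flagged this way is only a lead for further reporting, not proof of automation: genuine users also repost the same slogans within minutes of one another.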

Others, like India Today’s Balkrishna, see promise in AI’s ability to scour large datasets or files and help with data journalism. “Our team has been regularly using various AI tools in our reporting,” he told GIJN. “Some of them are: NotebookLM for analyzing large documents and identifying a pattern in them; ChatGPT for extraction, cleaning up and sorting data; Gemini for geolocation, mapping, and extracting location coordinates from a data sheet; AI tools for analyzing deepfakes and manipulated media; and Napkin AI for visualization.” And not only is Balkrishna using AI as a reporting tool, but he is investigating it as well. In January, he co-authored a story that discovered a latent, pro-Chinese bias in the new AI chatbot DeepSeek.

InSight Crime’s Christopher Newton recently looked at four ways Latin American criminal groups have started taking advantage of AI — and he plans to dig even deeper into the technology’s impact on corruption and organized crime in the region. All the while, his newsroom learns about its readers and improves its content thanks to AI.

“We use AI in our news monitoring system to try to rank articles based on relevance and to tag them to make it easier to find info in our internal database,” Newton told GIJN. They also use it to “help make coding more efficient and help us debug when making our own tools or custom graphics. We use AI for transcripts, translation, and copy editing (all with human supervision/review).”
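To make the idea of ranking and tagging monitored articles concrete, here is a minimal sketch. It swaps in simple weighted keyword counting rather than an AI model, and it is not InSight Crime’s system: the topic lexicon, its weights, and the function names are hypothetical.

```python
import re

# Hypothetical topic lexicon: tag -> weighted keywords (illustrative values only).
TOPIC_KEYWORDS = {
    "extortion": {"extortion": 3.0, "ransom": 2.0, "threat": 1.0},
    "drug-trafficking": {"cocaine": 3.0, "trafficking": 2.0, "cartel": 2.0},
}


def score_article(text, lexicon=TOPIC_KEYWORDS):
    """Return (total_score, tags) for one article using weighted keyword counts."""
    words = re.findall(r"[a-z]+", text.lower())
    tags, total = [], 0.0
    for tag, weights in lexicon.items():
        score = sum(weights.get(word, 0.0) for word in words)
        if score > 0:
            tags.append(tag)
            total += score
    return total, tags


def rank_articles(articles, lexicon=TOPIC_KEYWORDS):
    """Sort (title, text) pairs by descending relevance, attaching their tags."""
    scored = [(title, *score_article(text, lexicon)) for title, text in articles]
    return sorted(scored, key=lambda row: row[1], reverse=True)
```

In practice a newsroom would likely replace the keyword scores with a trained classifier or an LLM, but the output shape (a ranked list with tags for human review) stays the same.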

In a sign of things to come, InSight Crime is using AI in various ways as a newsroom tool while also investigating the technology’s deployment by organized crime in Latin America. Image: Screenshot, InSight Crime

GIJN members ICIJ and OCCRP have also added AI components to their respective in-house tools, Datashare and Aleph, which have helped mine millions of records and power the work of journalists around the world through cross-border collaborations.

It’s a sign of things to come for the news industry, where AI technology can, at various turns, be used both for and against a free press. It will fall to journalists, particularly investigative journalists, to ensure they work together so that the balance tips toward accountability and independence and away from oppression and propaganda.

Or, as the UN report concluded: “Cooperation among relevant actors is essential to develop AI-driven tools for information integrity, detecting and mitigating of disinformation, [and] fostering secure and human rights-based digital ecosystems.”

Reed Richardson is based in the United States. He has a Master’s degree in journalism from the Graduate School of Columbia University, New York, and has worked in digital media for more than 15 years. Before GIJN, he was a freelance features writer and media critic at The Nation, FAIR Media Watch, and Mediaite.

Andrea Arzaba is GIJN’s Spanish editor and also serves as director for its Digital Threats project. She holds a master’s degree in Latin American Studies from Georgetown University in Washington D.C. and a BA in Communications and Journalism from Universidad Iberoamericana in Mexico City. Her work has appeared in Palabra, Proceso Magazine, National Geographic Traveler, Animal Politico and 100 Reporters, among other media outlets.
