Image: Lisa Marie David for GIJN

GIJC25 Kicks Off with Global Summit on AI and Tech Reporting

Investigating big tech should not focus on the products and industry claims but rather the remarkably small group of companies and people exerting a growing power over the rest of us. And rather than being dazzled by the purported “magic behind the curtain” of AI, experts recommend that journalists focus instead on human choices and physical impact behind this booming industry.

These were two of the major themes from a high-level, all-day workshop on how journalists should tackle the new tech frontiers, at the kick-off of the 2025 Global Investigative Journalism Conference (GIJC25) in Kuala Lumpur, Malaysia.

Collectively titled The Investigative Agenda for Technology Journalism, this invitation-only series of panel discussions included two dozen speakers, featuring veteran editors, reporters, and forensic investigators from five continents on the technology beat.

A packed conference hall heard that the rapidly moving frontiers of technology have created myriad new opportunities for exploitation and abuse for bad actors across the world, and powerful challenges for journalists seeking to hold them accountable. From deepfake videos and targeting of autonomous weapons to online hate and algorithmic bias, speakers explained how these threats and the digital camouflage they employ require a combination of new skills, traditional journalistic methods, and the help of peers to understand and expose.

The sessions detailed how the stakes for holding technology corporations and their government allies accountable were higher than ever, with authoritarianism growing in concert, and vulnerable communities and the environment suffering direct harms from its spread into the Global South. One leading forensic investigator revealed that a second boom in cyber surveillance of journalists and dissidents — including zero-click spyware that quietly turns your phone into a surveillance tool — was imminent.

To encourage candid discussion, the day’s series of meetings was held under the Chatham House Rule, where the content of the discussion may be disclosed, but where the identity of participants and their comments may not be supplied without their express permission. Those identified in this report provided consent to use their names and quoted remarks.

“In terms of the most important investigative priorities, I think that’s to center power as the lens through which you examine tech, because there is a really, really tiny group of people making extraordinarily profound decisions that will have ripple effects on supply chains; on the environment; communities all around the world,” said Karen Hao, one of the world’s leading AI watchdogs, and the best-selling author of “Empire of AI: Inside the Reckless Race For Total Domination.”

Natalia Viana, co-founder and executive director of Agência Pública, one of Latin America’s largest nonprofit newsrooms, told attendees: “Many journalists think covering big tech is about covering tech — but it should be about investigating the people who make the decisions behind the algorithms and the market bubbles. These are the most powerful companies in history — and their products affect every aspect of our lives and democracies. It’s a huge power imbalance. They are very hierarchical — a handful of mostly men, and their tactics are reproduced everywhere.”

Despite generative AI’s enduring problems with bias and made-up answers, several reporters advocated for the careful, fact-checked use of large language model chatbots to produce useful and sometimes essential leads and pattern-matching at the outset of investigations. Notably, one veteran editor suggested that – because investigative journalism was a “high-skill, low-efficiency craft” with fewer than 10,000 full-time practitioners worldwide – the investigative journalism community should create its own LLMs to dramatically boost efficiency, and ultimately empower citizens to join the accountability fight.

But AI’s unchecked growth and influence are deeply rooted in mistaken public perceptions.

“I try to debunk all the different narratives that come out of Silicon Valley,” said Hao. “I think the central pillar of [big tech] is narrative: the ability to control, and shape the narrative that allows them to continue expanding and gaining unfettered access to resources.”

Other misleading narratives that reporters should challenge include:

- The idea that technology is neutral. In fact, even the most cutting-edge tech is deeply human: the result of human choices, influenced by worldviews, ideology, and self-interest.

- The narrative that technologies such as AI exist purely in some intangible cloud. In fact, they are deeply embedded in the physical world, such as the massive network of energy- and water-intensive data centers, and numerous projects linked to labor and human rights abuses. For instance, another speaker noted that investigative reporters should demand answers from cities such as Cape Town, South Africa, which face acute power and water shortages while also granting permission for new data centers.

- The idea that AI is “an everything machine,” which can tackle any problem, anywhere in the world. In fact, investigative journalists and researchers have already shown that AI models quickly start making mistakes when, for instance, tasks are requested in languages other than English.

Tackling Online Hate

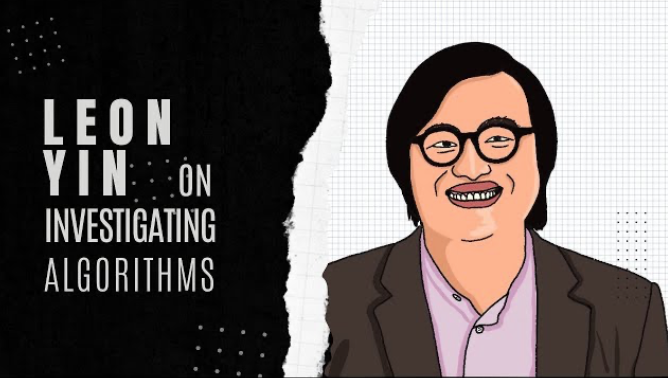

Image: Slide at GIJC25

The targeting and dehumanization of people and groups for who they are is not only on the rise, but, increasingly, is being amplified by populist governments that profit by scapegoating minorities. While news media generally covers the aftermath of online hate, such as public violence, experts recommend more scrutiny of the actual hate campaigns, their origins, and the growing monetization of toxic messaging.

Methods to investigate hate that panelists recommended included:

- Tracking how extremist claims move across platforms — especially Telegram, 4chan, Odysee, X, TikTok, and Facebook — or what reporters on this beat refer to as “cross-platform OSINT mapping.”

- Following how content migrates to other platforms to evade platform bans or moderator action.

- Using tools such as Maltego or Gephi to identify the individuals who spread, generate, or reinforce these narratives.

- Following the money to corporate accountability. Sometimes the people spreading hate earn money directly from the platform they use. Session speakers noted that policymakers are more likely to take regulatory action if grand-scale enablement is demonstrated.

- Conducting content analysis. Develop a system for analyzing memes, videos, and chats within communities.

- Creating your own “codebook” of terms and “dog-whistle” phrases beneath hate categories, such as misogyny, antisemitism, xenophobia, anti-LGBTQ bigotry, and racism. Identify the meme templates and rhetorical tricks that hate disseminators share.

- Studying frequency patterns and calls to action.
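
As a rough illustration of the codebook and frequency-pattern methods above, the following Python sketch tags posts against a small codebook and counts matches per day, platform, and category. It is a minimal sketch under stated assumptions, not a method the panelists described: the codebook entries are neutral placeholders, and the post fields (`day`, `platform`, `text`) and function names are hypothetical.

```python
import re
from collections import Counter
from datetime import date

# Hypothetical codebook: hate category -> coded terms.
# The entries are placeholders; real terms come from a reporter's own research.
CODEBOOK = {
    "xenophobia": ["placeholder term a", "placeholder-term-b"],
    "misogyny": ["placeholder term c"],
}


def compile_codebook(codebook):
    """Build one case-insensitive, whole-phrase regex per category."""
    patterns = {}
    for category, terms in codebook.items():
        alternatives = "|".join(re.escape(term) for term in terms)
        # The lookarounds stop a term from matching inside a longer word.
        patterns[category] = re.compile(
            r"(?<!\w)(?:" + alternatives + r")(?!\w)", re.IGNORECASE
        )
    return patterns


def tag_post(text, patterns):
    """Return the sorted categories whose terms appear in the text."""
    return sorted(c for c, p in patterns.items() if p.search(text))


def daily_counts(posts, patterns):
    """Count matching posts per (day, platform, category)."""
    counts = Counter()
    for post in posts:
        for category in tag_post(post["text"], patterns):
            counts[(post["day"], post["platform"], category)] += 1
    return counts


# Assumed input shape: one dict per collected post.
posts = [
    {"day": date(2025, 1, 1), "platform": "telegram",
     "text": "Some Placeholder Term A here"},
    {"day": date(2025, 1, 1), "platform": "telegram",
     "text": "placeholder term a again, and placeholder term c"},
    {"day": date(2025, 1, 2), "platform": "x",
     "text": "nothing relevant"},
]

patterns = compile_codebook(CODEBOOK)
counts = daily_counts(posts, patterns)
for key, n in sorted(counts.items(), key=lambda kv: str(kv[0])):
    print(key, n)
```

Keyword matching of this kind only surfaces candidates for human review: coded language shifts quickly, and a matched term can also appear in posts that quote or criticize it.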

However, beyond their accountability role, journalists also have a public service role to play: countering hate without inadvertently amplifying it.

To establish a journalistic corollary to the Hippocratic Oath of “first, do no harm,” panelists urged other reporters to follow these best practices:

- Provide contextual reporting that exposes hate narratives — while starving them of exposure or reach — and helps readers understand coded language and tactics behind this threat.

- Give a voice to targeted communities, to boost awareness and counter dehumanizing narratives.

- “Blur slurs,” and paraphrase hate language.

- Clearly explain radicalizing behavior, such as the use of bots and “sockpuppets.”

Panelists shared chilling cases of tech abuse from their recent investigations, such as how the identities of female Ukrainian journalists were stolen and used for AI-generated Russian propaganda deepfake videos that attracted 24 million views; how the Mexican army secretly surveilled human rights activists; and how Israel’s military used a machine learning program to “generate” hundreds of new targets, with lethal consequences for civilians in Gaza.

Attendees also heard about the need for journalists on this beat to be deeply sensitive to less technologically literate audiences.

“Remember to imagine communities where ‘algorithm’ doesn’t even exist in their mother tongue,” an African data journalist pointed out. “Older generations are clicking on all sorts of links, and at the mercy of scamsters without our help. How do we explain phishing to these vulnerable people? Tech systems are entrenching systemic injustice in the Global South.”

Rowan Philp is GIJN’s global reporter and impact editor. A former chief reporter for South Africa’s Sunday Times, he has reported on news, politics, corruption, and conflict from more than two dozen countries around the world, and has also served as an assignments editor for newsrooms in the UK, US, and Africa.
