Free, Game-Changing Data Extraction Tools that Require No Coding Skills

Read this article in

Welcome back to the GIJN Toolbox, in which we survey the latest tips and tools for investigative journalists. In this edition, we’ll explore three free and relatively easy solutions that reporters can use to scrape data from documents. These techniques were presented at the recent 2022 Investigative Reporters & Editors conference (IRE22) and attracted “oohs” and audible ripples of approval from assembled journalists. (We can’t think of a better endorsement than the spontaneous response of watchdog reporters.)

When reporters finally obtain the data they need for their investigations, they are often faced with a second problem: how to select and extract that data, so it can be used and moved around in spreadsheets. For many small newsrooms, manual entry, advanced coding, or costly commercial OCR (optical character recognition) services are not realistic data scraping options.

What’s more, several veteran watchdog journalists at IRE22 noted that they were not only seeing an increase in the amount of public documents released in unstructured or “dead” formats – such as scanned documents or “flat” PDFs – but that some government agencies deliberately use these formats to burden the reporting process.

In a final challenge, many agencies around the world direct reporters to check webpages for their requested data, which requires copying and pasting individual boxes on tables, and manually clicking through numerous tabs or sheets to reach the end of the full dataset.

“I file a ton of public records requests, and I find that it is now exceptionally rare for me to get the document or data I requested in the format I requested,” said Kenny Jacoby, an investigative reporter at USA today, who presented several PDF tools at the conference. “Sometimes it seems like the agency giving you the document intentionally wants to make your life harder – they’ll strip out the text from a PDF, or scan it before they send it, or the data is in some unstructured format with no columns and rows. These impediments can really slow us down, so it’s important to have tools to deal with them.”

Google Pinpoint — and its New Features to Conquer PDFs

In 2020, GIJN was among the first to announce the availability of a new AI-powered document parsing tool from the Google Journalist Studio, now branded as “Pinpoint.” We described the newly-released tool as “a turbo-charged Ctrl-F” function with advanced OCR that could quickly search vast troves of documents and images. In a data session at IRE22, Jacoby said Pinpoint has since evolved into a free, digital master tool with easy access for professional journalists, thanks partly to input to its developers from investigative reporters.

Jacoby showed that Pinpoint’s data features now include these:

-

If you search for a single keyword – say, “faculty” – it will not only find that word throughout your uploaded research file, but will also highlight related words – such as “teacher,” or “campus,” or “professor.” It also finds tense variations for the searched term; supports seven languages, including Portuguese, Spanish, French, and Polish; and can exclude unwanted terms with a minus sign.

-

Upload bundles of scanned or PDF documents – or even pages of handwritten scrawl – and it can rapidly turn them into “live,” searchable, copy-pastable text documents. It even reads words that run in directions other than horizontal.

-

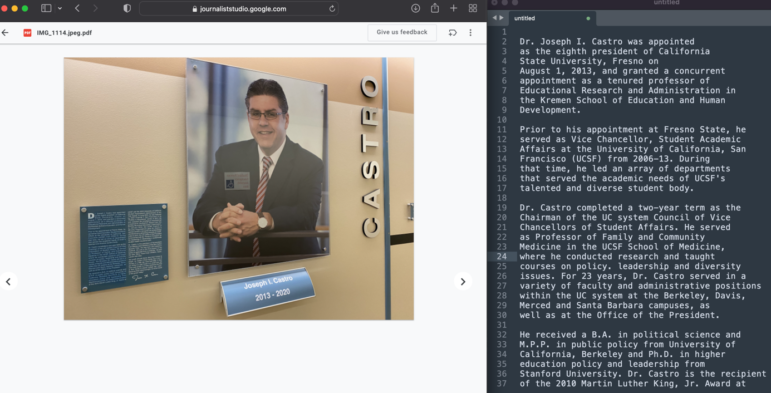

The tool will not only recognize and text-transcribe signage or graffiti in images, but can also reproduce long passages of tiny script it notices on plaques or noticeboards in the background of images. (There was an audible gasp from journalist attendees during the Pinpoint demo when it was able to read and process tiny writing on a dense, angled biographical plaque in one photo. One NBC Telemundo reporter, Valezka Gil, exclaimed: “Oh my gosh! You’ve just changed my life – it will save me so much time.”)

-

Jacoby says its audio and video transcription feature is now so advanced that he uses the free Pinpoint tool instead of dedicated transcription services like Trint or Otter – which have small subscription fees – to create searchable transcriptions of his audio interviews. “This single feature is like those tools, but it’s free,” he said. “One thing it doesn’t do that Trint and Otter do is it doesn’t identify who is speaking, and assign a name to each person — like ‘Speaker 2’. But it does identify logical breaks in conversation, and inflection points in voices. You can just click to a point in the text transcript and it will start playing it at that point.”

Jacoby said free access to Pinpoint’s features is now straightforward, and that extra storage for large projects can be requested from its technicians.

“You do need to get approved to use it, but when me and my wife – also a journalist – signed up, we were approved almost instantly,” he noted. “You may need a work email address, but it’s not hard to get in, and the team there is very responsive.”

The downside? Pinpoint is a wholly online service.

“That means you need an internet connection, and you’re uploading your documents to some server somewhere, and – if, say, Google got subpoenaed – it’s possible your documents could be turned over,” he warned. “Also, it doesn’t allow you to download a copy of the OCR document – it lives on Pinpoint, so you have to copy-paste the text out. But it probably has the best OCR in the business.”

Journalists at IRE22 were amazed to learn that the optical character recognition (OCR) capability of the free, Google Pinpoint tool is powerful enough to read and transcribe text as small as the writing on the blue biographical plaque in this photograph. Image: Kenny Jacoby

ImportHTML/ XML Hack for Data on Websites

As ProPublica’s Craig Silverman recently demonstrated for GIJN: the source code behind any website offers a wealth of digging tools for investigative journalists, and – despite its intimidating look for non-coders – requires no skills beyond “Control-F,” or “Command-F,” to navigate.

At a session on Google Sheets scraping at IRE22, freelance journalist Samantha Sunne demonstrated how this code can also be used to easily grab long tables or specific data items on websites, and – within seconds – populate all of their data in the format you need in a spreadsheet. No need to individually copy and paste hundreds of boxes into your file. The technique involves a formula to instruct Google Sheets — in its first, top-left box — to extract a source code element you need from a web page (the code that builds, say, a data table you liked on the facing page).

In fact, you don’t really need to look at any code at all to extract a well-formatted data table on any site. Just follow these steps:

To import a single data table from a web page – no matter how long it is – simply type the following formula into Google Sheets: =IMPORTHTML(“URL”, “table”) If the data is formatted as a list, try “list” instead of “table” – and if you want, say, the second list on a page, try adding the numeral 2 after a comma and space: =IMPORTHTML(“URL”, “list”, 2)

When GIJN tried this hack to import a table of 564 failed banks from the website of the US Federal Deposit Insurance Corp., the entire process — from copying the FDIC URL to opening Google Sheets and having the entire list of banks perfectly formatted into columns – took less than 15 seconds. However, it’s important to use the exact punctuation needed for the formula, including a comma after the URL, and quotation marks around the two items in parenthesis. Remarkably, any updates to the live website data will also automatically appear in the Google Sheet – so you don’t have to keep checking the page during your investigation – unless you have disabled that update function.

Still, Sunne said it was also important for reporters to become at least somewhat familiar with html elements, to get a sense of how computers package the data we see on facing pages, to make it easier to handle imperfectly formatted information – and to dig much deeper with more advanced formulas.

To find the code that builds any page, simply right-click on any empty or white space on a site, and click “view page source” or “show page source.” In general, the important point to remember, she said, is that any words you see on the human-facing webpage must also appear in the computer source code page, so you can simply “Ctrl-F” to find any data term in that code, look to see what element tags are used to capture it, and experiment with those tags in the formula.

“While useful, the ImportHTML formula can only pull in tables and lists – but there is another formula, ImportXML, that can pull in any html element,” Sunne explained. “It looks very similar – the equals sign; the formula name, the URL – but you can get way more specific.” Here’s how to do it:

To import specific data elements on a web page – like individual table rows, or bold text-only, or headings – try a formula like this (for a data headings example): =IMPORTXML(“URL”, “//h2”) , or this (for table rows): =IMPORTXML(“URL”, “//table/tr”)

There are many commonly-used html elements, like “//h2” (header) and “/tr” (table row) – which reporters can find in html dictionaries – but Sunne recommends that journalists simply take note of the elements surrounding the data they need, and identify key computer jargon tags that can help refine their next data imports. For practice, try using the these two data scraping techniques on large Wikipedia sites, which commonly have several data lists and tables.

Tesseract, with ImageMagick, for Securely Extracting Data Offline

Kenny Jacoby, of USA Today, said an open source OCR engine called Tesseract offers a great data extraction solution for sensitive documents as well as huge data archives, if the quality of the input data is good enough. Remarkably, its latest version also recognizes over 100 languages, as well as right-to-left written text in Hebrew or Arabic.

Tesseract converts images without a text layer to selectable and searchable PDFs, and Jacoby said it is especially powerful in converting large batches of “flat” documents into live, copy-pastable text. He said that generally means that reporters must first convert PDF documents to high resolution images – ideally, using the open source ImageMagick tool – and then feed these into Tesseract to get the scraped data.

“Its OCR is not as good as Pinpoint, but it’s pretty good,” Jacoby said. “But a big benefit is that it’s offline – you can do everything locally, on your terminal, so it’s good for sensitive work. It’s really good for batch conversions; for each of 1,000 documents, you can OCR all of them.”

He added: “You may have to increase the quality of the image or the contrast, but with ImageMagick, you can boost the quality of the image.”

In addition, Jacoby recommended a detailed guide on the Tesseract and ImageMagick tools by Wall Street Journal investigative reporter Chad Day, which can be found here on Github.

While the Tesseract solution does require some “intermediate” coding skills, Jacoby said this can be a one-off scenario, in which a person with command-line skills could set the program up in one visit, and provide a reporter with two short lines of code that they can then plug in for each future data extraction. To extract tables printed in PDF formats, Jacoby recommended the Tabula app – a better-known open-source tool that was created by journalists from OpenNews and ProPublica. “It essentially liberates data tables from PDFs, and dumps them into spreadsheets,” he explained.

Tabula allows reporters to simply draw a box around a table on their computer screens to extract the data they want, and that it can also auto-detect tables – including those without borders. While Tabula requires “live” or OCR’d documents, he said the tool works well with text files created by Tesseract. “It’s also offline, so it’s very private,” he noted.

Additional Resources

Digging Up Hidden Data with the Web Inspector

Why Web Scraping Is Vital to Democracy

Tips for Building a Database for Investigations

Rowan Philp is a reporter for GIJN. He was formerly chief reporter for South Africa’s Sunday Times. As a foreign correspondent, he has reported on news, politics, corruption, and conflict from more than two dozen countries around the world.

Rowan Philp is a reporter for GIJN. He was formerly chief reporter for South Africa’s Sunday Times. As a foreign correspondent, he has reported on news, politics, corruption, and conflict from more than two dozen countries around the world.