
What PolitiFact Learned about Making Money and Earning Trust

Photo: Shutterstock

When journalists practice transparency around their processes, their goals, and their values, news consumers tend to respond positively. Sometimes, they even spend more money on journalism.

That’s the case with an experiment we ran this summer with PolitiFact. We divided the audience of their weekly email newsletter into segments to test two things:


- If you use the language around your donation button to highlight a specific value you bring to your audience, are readers more likely to click?

- If you share more behind-the-scenes information about how you operate and what motivates your work, will it influence how people feel about your brand?

In both cases, the answer was yes. Adding trust-building strategies to PolitiFact’s newsletter for one audience segment increased clicks on the donation button and raised PolitiFact’s Net Promoter Score within that segment.

We’re going to share details about that here. We’re also able to link to the full report we sent the PolitiFact team. Huge thanks to them for being willing to have us share their data — including newsletter subscription numbers and open rates — publicly, so the industry can learn from it, and so we can be transparent about our Trusting News process.

This project was especially exciting for the Trusting News staff because measurement of trust is complicated, but dollars and brand ratings are pretty simple. There’s not a universal trust metric, so we work a lot in proxies for trust. We look at how audiences respond, what they click on, what they question and how they engage with journalists. We interrogate a lot of qualitative data, and we look for patterns in what seems to be working.

It’s always nice to be able to draw a straighter line between trust strategies and clear signs of success. It stands to reason that people are more likely to pay for journalism, and to recommend it, if they trust it. That’s what this experiment looked at.

Details on the donation button test:

When we started this A/B test in May 2019, the newsletters had pretty standard language introducing the donation button. One audience segment, Group A, was our control group. They continued to see the existing donation invitation.

Group B was the test group. Over 18 weeks, they rotated through five messages introducing the donation button:

- Now more than ever, it’s important to sort fact from fiction. Please donate to support our mission.

- No spin, just facts you can trust. Democracy depends on it. Support the truth today.

- Our fact-checks disrupt the agendas of politicians across the ideological spectrum. Support the truth today.

- We follow the facts and share what we learn so you can make your own decisions. Support our mission today.

- Our only agenda is to publish the truth so you can be an informed participant in democracy. We need your help.

By making changes to the language around the donation button, we were primarily looking to see whether the changes motivated more readers to click the button. We were secondarily interested in whether those clicks led to actual donations.

It worked!

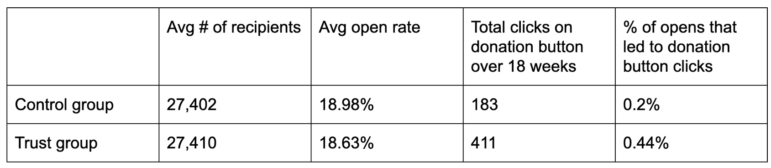

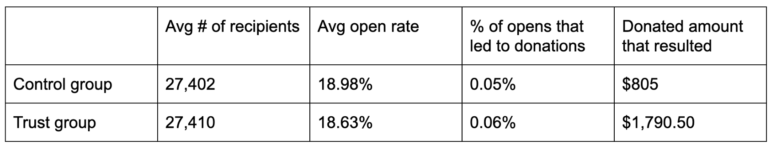

Our main measure was how many readers in each group clicked the donation button. Measured against the total number of newsletter opens, the trust version was more than twice as likely to lead to a click on the donation button.
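The comparison above is a ratio of two per-open click rates. As a minimal Python sketch (the `Segment` class and `relative_lift` function are hypothetical names, and the numbers in the usage line are invented for illustration, not PolitiFact’s data), each segment’s rate is clicks divided by opens, and “twice as likely” means the test rate is at least double the control rate:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical container for one audience segment's newsletter stats."""
    name: str
    opens: int   # total newsletter opens (not unique readers)
    clicks: int  # clicks on the donation button

    @property
    def click_rate(self) -> float:
        """Donation-button clicks per newsletter open."""
        if self.opens <= 0:
            raise ValueError("opens must be positive")
        return self.clicks / self.opens


def relative_lift(test: Segment, control: Segment) -> float:
    """How many times more likely an open in `test` is to produce a click.

    Cross-multiplied so the integer math stays exact until one final division.
    """
    if control.clicks == 0 or test.opens <= 0 or control.opens <= 0:
        raise ValueError("need positive opens and at least one control click")
    return (test.clicks * control.opens) / (test.opens * control.clicks)


# Invented numbers, for illustration only.
control = Segment("control", opens=1000, clicks=10)
trust = Segment("trust", opens=1000, clicks=25)
print(relative_lift(trust, control))  # 2.5
```

Normalizing by opens rather than by list size keeps the comparison fair if one segment happens to open the newsletter more often.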

The trust version also led to more donations, which was our secondary interest: both the number of donations and the total amount donated were higher.

Details on the behind-the-scenes language test:

Each week, our test group also saw an extra bullet point with information about PolitiFact’s processes and motivations. The team crafted these based on what they wish all readers knew about their newsroom. Basically, they identified potential roadblocks to trust and then were proactive about addressing them. Those messages included:

- When PolitiFact started more than 10 years ago, no one was sure it would work. Fact-checking definitely wasn’t yet a trend. But here we are, more than 16,000 fact-checks and a Pulitzer Prize later, helping to spread fact-checking journalism around the world. Read more about our history here.

- What goes into a fact-check? A carefully selected statement, on-the-record sourcing, and, ultimately, a panel of three judges to rate the statement on a scale from True to Pants on Fire. This system has allowed us to thoughtfully rate claims since 2007. Learn more about our process and rating system.

- We use only on-the-record (never anonymous) sources and primary documents. We dig deep and don’t rely on secondhand reports. More on our sources.

- Show me the money: About half of our revenue comes from selling content to publishing partners, which include TV stations, other news organizations, and Facebook. Grants and donations are about 15 percent. Advertising makes up the rest of our revenue. Learn more about who pays for PolitiFact.

- We are independent and nonpartisan. We fact-check all parties. We’ve given negative ratings to Republican President Donald Trump. We’ve also given negative ratings to prominent Democrats: Hillary Clinton on her emails. Bernie Sanders on gun sales. Barack Obama on Internet surveillance. This is how we choose claims to check.

- We’re always on the lookout for biases in our own work. We respond to (and publish) reader feedback, and we hope you’ll let us know if you think we slip up.

By adding language about the brand’s values, goals, and processes, we were primarily looking to see whether opinions about the brand were higher in the trust segment than in the control segment. We measured this with an audience survey sent in September 2019 to all recipients of the weekly email. It included a basic Net Promoter Score question: “How likely is it that you would recommend PolitiFact to a friend or colleague?”
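For readers new to Net Promoter Score: assuming the standard 0 to 10 answer scale, the usual convention counts 9s and 10s as promoters, 7s and 8s as passives, and 0 through 6 as detractors. The score is the percentage of promoters minus the percentage of detractors, so it runs from -100 to 100. A minimal Python sketch of that convention (the function name is hypothetical and the sample responses are invented):

```python
from collections.abc import Iterable


def net_promoter_score(responses: Iterable[int]) -> float:
    """Return NPS on a -100..100 scale from 0-10 'how likely to recommend' answers."""
    scores = list(responses)
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("responses must be integers from 0 to 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(scores)


# Invented responses: 6 promoters, 2 passives, 2 detractors out of 10.
print(net_promoter_score([10, 10, 10, 9, 9, 9, 8, 7, 5, 2]))  # 40.0
```

Because passives dilute both percentages without counting toward either, two groups can share a similar share of promoters yet end up with different scores.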

Note: Readers in the test group had also been seeing the trust language on the donation button. That’s a limitation of the experiment: We were testing two independent factors but were limited to two audience segments, so we can’t separate their effects. The two factors almost certainly influenced both metrics.

It worked!

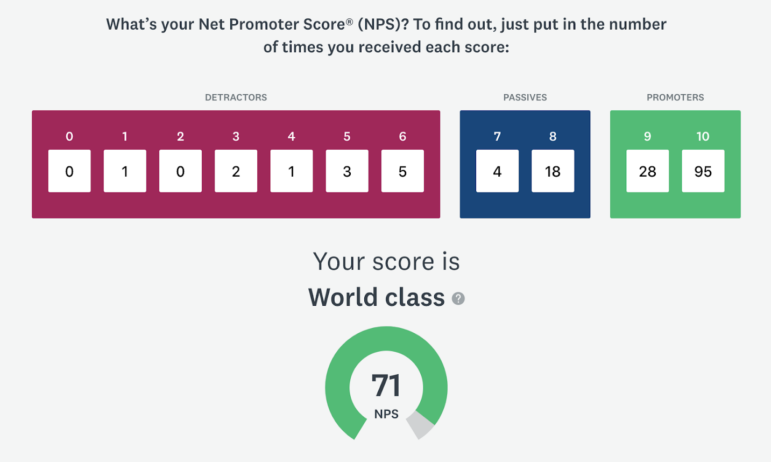

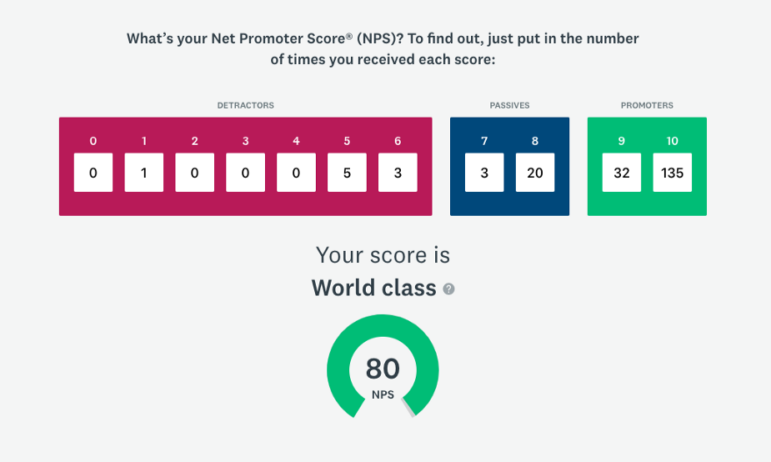

The NPS result for the control group was 71.

The NPS result for the test group, which got the trust-building strategies, was 80.

The survey also asked participants about their political leanings. We paid special attention to people who identified themselves as moderate, somewhat right-leaning, or very right-leaning, as their trust in political fact-checking tends to be lower. Among those respondents, the NPS was also higher for people who had been exposed to the trust language: moderate-to-conservative participants in the control segment had an NPS of 70, and those in the trust segment had an NPS of 76.

To see more specifics about the methodology, check out the full report we sent the PolitiFact team. Thanks to these staffers for their involvement and support:

- Editor Angie Holan, who let us mess with her newsletter

- Executive Director Aaron Sharockman, who approved the sharing of the data and supported the project

- Audience Engagement Editor Josie Hollingsworth, who did most of the real work — crafting the language, setting up the mechanics of the experiment, and tracking the data

What should journalists learn from this?

Every time journalists invite their audiences to interact with them, it’s an opportunity to demonstrate integrity, highlight credibility and build trust. That’s true whether we’re linking to content, engaging on social media, calling a source, or meeting people face to face.

We know that when our audience doesn’t understand something about our ethics, our processes, and our motivations, they don’t generally give us the benefit of the doubt. Instead, they insert their own (often unflattering) assumptions.

Put those two thoughts together, and it means that we should be looking for chances to imagine what people don’t know about us and go ahead and tell them. It’s not complicated, yet we don’t do enough of it.

Can you do one of these three things?

- Build trust with your newsletter. Newsletters are a great place to earn trust because we often feel comfortable there with a looser, more personal style of writing. Plus, the ease of A/B testing makes it fairly simple to see if readers respond differently to two versions of something.

- Add trust language anywhere you ask for money. Whether you’re looking for donations, memberships, or subscriptions, it’s smart to reinforce your values (what you stand for and what motivates your work) and your value (what you offer your community).

- Try a Net Promoter Score survey as a simple tool to measure brand sentiment. Connect it to trust where you can (like in a newsletter, so you can A/B test!). For more on how this tool can help you learn about your audience, see this Lenfest post about the Bangor Daily News.

Let us help.

At Trusting News, we’re only making a difference if we’re useful to the industry. We’re paid to help you earn the trust of your audience and get credit for your work. Our weekly newsletter is short and actionable. We offer free, one-on-one advice. We offer a free Trust 101 class. And in February, we’re offering an almost-free full-day workshop at The Poynter Institute. We’d love to work with you. Send questions to info@TrustingNews.org.

Trusting News is designed to demystify the issue of trust in journalism. We research how people decide what news is credible, then turn that knowledge into actionable strategies for journalists. We’re funded by the Reynolds Journalism Institute, the American Press Institute, Democracy Fund, and the Knight Foundation.

This article first appeared on Trusting News’ Medium site and is reproduced here with permission.

Joy Mayer is an engagement specialist. She is the founder and director of Trusting News. Mayer is also an adjunct professor at the Poynter Institute and community manager for Gather, a platform that supports community-minded journalists and other engagement professionals.
