Health and Medicine Guide: Chapter 2

A Study Is Not Just a Study. Get Your Numbers Straight

Tip 1: Stick to EBM and Use PICO

As we stated in our introduction, using Evidence-Based Medicine (EBM), defined as “the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients,” as a method of investigation can be time-consuming, but highly effective.

EBM methods are a great fit with good muckraking: keep questioning what you hear and read, look for the best available evidence, and independently assess its quality. Sounds familiar, doesn’t it? Some EBM principles, such as applying the Critical Appraisal method and adapting the PICO criteria to your journalism, will be highly effective in your investigation.

EBM states that to search the literature and analyze the risk-benefit ratio, a clinical question can be broken down into four dimensions, the PICO criteria: Patient or population, Intervention, Comparator, and Outcome. PICO helps us to see if data is missing or flawed, for instance if an inadequate comparator or an aforementioned surrogate outcome was applied.

Illustration: Re-Check.ch

Tip 2: A Study Is Not Just a Study

“A study has shown” … but what kind of a study was it? As neatly put by Gary Schwitzer in his essential guide Covering Medical Research: “Not all studies are equal. And they shouldn’t be reported as if they were.” Being aware of this will make a big difference to your investigation. If your work is based on weak scientific evidence, you won’t have a strong story, and there’s a good chance some of it will be wrong.

In order to work as an investigative journalist in the area of health and medicine, remember that flaws in scientific methodology often indicate that further digging is needed. The learning curve can be steep, but you can start by checking out the wide range of studies as discussed in Types of Study in Medical Research: Part 3 of a Series on Evaluation of Scientific Publications, a 2009 article in the journal Deutsches Ärzteblatt International.

Understanding the differences among types of studies will help you avoid many mistakes. In short, you have to start by asking two major questions. First, was the study conducted on humans? Or was it conducted on animals or on cells? Research on humans is called clinical research. When it comes to assessing the effect of a drug or another health measure, the only truly significant results are those obtained on humans because, in short, humans are so different from mice.

A result on mice may be interesting, but any conclusions about a treatment’s efficacy on humans drawn from animal studies are speculative. Such studies belong to the so-called “pre-clinical” development stage of a new drug. Remember that in the history of medicine there have been many drugs that seemed very promising when tested on animals but had to be pulled when it became clear they were ineffective or even toxic for humans.

If the study was conducted on humans, you then have to ask the second relevant question: Was the trial an experimental (also called interventional) study or an epidemiologic (also called observational) study?

This is essential, as pointed out by HealthNewsReview.org: “Epidemiologic — or observational — studies examine the association between what’s known in epidemiologic jargon as an exposure (a food, something in the environment, or a behavior) and an outcome (often a disease or death). Because of all the other exposures occurring simultaneously in the complex lives of free-living humans that can never be completely accounted for, such studies cannot provide evidence of cause and effect; they can only provide evidence of some relationship (between exposure and outcome) that a stronger design could explore further.”

By doing an experimental study, researchers test if intervention A (e.g., a drug or vaccine) does actually lead to outcome B (e.g., a cure or disease prevention). Among experimental studies, the only design that can demonstrate a cause and effect relationship is the randomized controlled trial (RCT), where the study subjects are assigned at random to the intervention (such as a drug or vaccine) or to a control (such as a placebo or another drug). Randomization makes both groups truly comparable: the only difference between the intervention group and the control group is whether their subjects receive the intervention under study or the control. This experimental setting is the only one that allows us to conclude that the outcome difference between the intervention group and the control group is attributable to the tested drug or vaccine.

“Because observational studies are not randomized, they cannot control for all of the other inevitable, often unmeasurable, exposures or factors that may actually be causing the results,” concludes HealthNewsReview.org. Thus, any “link between cause and effect in observational studies is speculative at best.”

So beware: Observational studies cannot, in any circumstances, lead to a conclusion about the effectiveness of a measure, even when a statistically significant association seems to be established. Only an experimental study designed as an RCT can establish whether there is a causal relationship between the intervention tested and the observed effect.
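
To make the point about randomization concrete, here is a minimal simulation sketch. Everything in it is an assumption made up for illustration: the hidden factor ("already in good health"), the probabilities, and the function name `recovery_gap` are hypothetical and not drawn from any study. By construction the treatment has no effect at all, yet the observational comparison shows a large gap because healthier people are more likely to take it, while random assignment makes the gap shrink toward zero.

```python
import random


def recovery_gap(randomized, n=100_000, seed=0):
    """Recovery rate of treated minus untreated people.

    Hypothetical setup: the treatment does nothing. Recovery depends only
    on a hidden factor (being in good health to begin with).
    """
    rng = random.Random(seed)
    # counts[treated] = [number recovered, number of people]
    counts = {True: [0, 0], False: [0, 0]}
    for _ in range(n):
        healthy = rng.random() < 0.5
        if randomized:
            # RCT: a coin flip decides who is treated.
            treated = rng.random() < 0.5
        else:
            # Observational: healthy people choose the treatment more often.
            treated = rng.random() < (0.8 if healthy else 0.2)
        # Recovery ignores the treatment entirely (made-up probabilities).
        recovered = rng.random() < (0.8 if healthy else 0.4)
        counts[treated][0] += int(recovered)
        counts[treated][1] += 1
    treated_rate = counts[True][0] / counts[True][1]
    untreated_rate = counts[False][0] / counts[False][1]
    return treated_rate - untreated_rate


print(f"Observational gap: {recovery_gap(randomized=False):+.3f}")
print(f"Randomized gap:    {recovery_gap(randomized=True):+.3f}")
```

The observational gap comes entirely from who ends up in each group, which is the kind of unmeasured exposure the HealthNewsReview.org quote describes.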

Also, consider that observational and retrospective studies are more prone to the potential limits of statistical analysis. Sometimes statistics can be used by researchers or sponsors to tweak the results. So when analyzing numbers, keep in mind what Darrell Huff, best known for his book “How to Lie with Statistics,” said in 1954: “Statistics can pull out of the bag almost anything that may be wanted.” Nobel-winning economist Ronald H. Coase echoed this, saying: “If you torture the data long enough, nature will always confess.”

Multicentric, double-blind RCTs are considered the gold standard in determining the efficacy of an intervention. Their design is superior in controlling the parameters likely to distort the results (so-called confounding factors and bias). Two valuable resources to learn these basics: Students 4 Best Evidence and Types of Clinical Study Designs from Georgia State University.

The illustration below describes best practice in evaluating the strength of the evidence. Note that experts’ opinions are at the bottom of the pyramid.

Illustration: Re-Check.ch

A simpler version can be found here:

Illustration: Re-Check.ch

Be aware that the EBM pyramid concept should be questioned too. Whereas RCTs are the reference standard for studying causal relationships, a meta-analysis of RCTs — a systematic analysis and review of different studies and results — is considered the best source of evidence. Bear in mind, however, that if the studies in the meta-analysis are defective, its results won’t be reliable, either. Furthermore, often meta-analyses (for instance those published by Cochrane) conclude there isn’t enough evidence to answer a research question, which is not what journalists typically want to hear.

Reporting on health also means becoming knowledgeable about many flaws in clinical research. Trials showing a significant “positive” result are published, whereas “negative” studies most often are not. Some types of studies are more subject than others to bias, defined by the Cochrane Handbook as “systematic error, or deviation from the truth.” Another resource is the Catalogue of Bias, a collaborative project mapping all the biases that affect health evidence.

A typical mistake journalists make is to confuse correlation and causation. It is tempting to see a link between two phenomena, but first you must ask if there really is a causal relationship. Mathematician Robert Matthews gives an amusing example of this: he shows a highly statistically significant correlation between stork populations and human birth rates across Europe.

Few are aware of the sometimes fraudulent practices that take place in the field of health research. There are studies based on imaginary patients, or written by ghostwriters. There is a lot of literature on such practices, and it is worth getting familiar with it. See, for instance, Retraction Watch’s Study by Deceased Award-Winning Cancer Researcher Retracted Because Some Patients Were ‘Invented,’ and Ivan Oransky’s examples in his interview with The Irish Times, The Shady Backstreets of Scientific Publishing.

The most common mistake reporters make is drawing the wrong conclusions from weak scientific evidence. Consider taking this free online course on Epidemiological Research Methods from the Eberly College of Science at Penn State University.

Tip 3: Absolute Values and Natural Frequencies

Not everyone is a crack statistician. However, numbers are key to investigating health. Don’t be afraid to Test Your Risk Literacy (English, German, French, Dutch, and Spanish), and keep in mind that when a new drug or a new health policy or regulation is launched or promoted, the focus is on its benefits. Unfortunately, it’s not enough simply to add risks to the picture.

It’s necessary to understand the relationship between benefits and risks. This isn’t easy. Our ability to reason is governed by so-called judgmental heuristics or cognitive shortcuts, resulting in limited rationality. Because of these well-studied phenomena, we tend to struggle with probabilities, especially percentages.

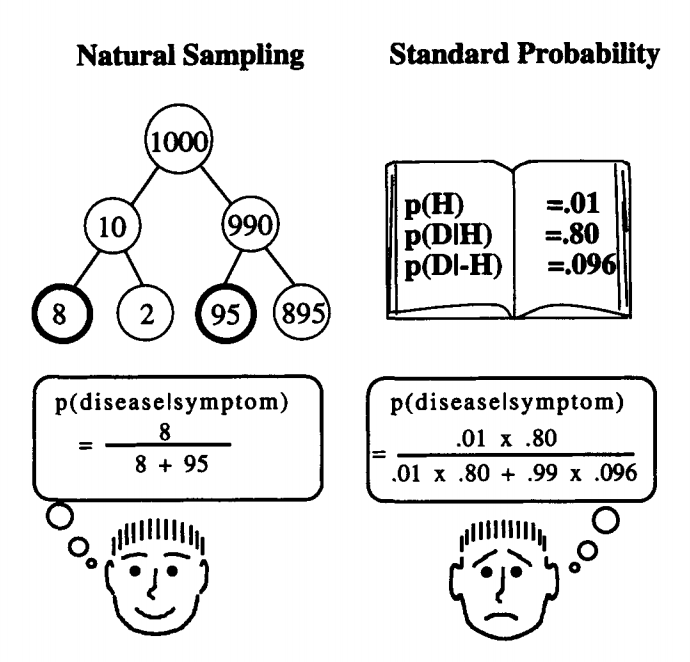

Confused? Have a look at the illustration below. Same numbers. Which one is easier to grasp?

Illustration: Gerd Gigerenzer, Ulrich Hoffrage. How to Improve Bayesian Reasoning Without Instruction: Frequency Formats. Psychological Review, 1995, Vol. 102, No. 4, 684-704
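
The contrast between the two formats can also be sketched in a few lines of code. The numbers used here (1% of people have the disease, the test detects 90% of cases, and 9% of healthy people get a false alarm) are hypothetical, chosen only for illustration, and are not taken from the illustration; the function names are likewise made up. The first function works with probabilities, the second restates the same information as counts of people.

```python
def positive_predictive_value(prevalence, sensitivity, false_positive_rate):
    """Probability of having the disease given a positive test (Bayes' rule)."""
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


def as_natural_frequencies(prevalence, sensitivity, false_positive_rate,
                           population=1000):
    """Restate the same information as whole numbers of people."""
    sick = round(population * prevalence)
    healthy = population - sick
    sick_positive = round(sick * sensitivity)
    healthy_positive = round(healthy * false_positive_rate)
    return sick, sick_positive, healthy, healthy_positive


# Hypothetical test characteristics, for illustration only.
ppv = positive_predictive_value(0.01, 0.90, 0.09)
sick, sick_pos, healthy, healthy_pos = as_natural_frequencies(0.01, 0.90, 0.09)

print(f"Probability format: P(disease | positive test) = {ppv:.1%}")
print(f"Frequency format: of 1000 people, {sick} have the disease and "
      f"{sick_pos} of them test positive; of the {healthy} without it, "
      f"{healthy_pos} also test positive.")
print(f"So {sick_pos} out of {sick_pos + healthy_pos} positive tests "
      f"belong to people who are actually sick.")
```

Both formats lead to roughly the same answer; the frequency version simply lets you read it off by counting people.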

Advocates of a particular outcome may present the information they want to emphasize as a percentage, while communicating the information they want to make less prominent in absolute numbers. So, pay attention to the way the data is presented. Absolute numbers are more clearly representative than percentages.

The following example by Gerd Gigerenzer and co-authors from the Harding Center for Risk Literacy starkly shows how misleading this can be:

“In 1996 a review of mammography screening reported in its abstract a 24% reduction of breast cancer mortality; a review in 2002 claimed a 21% reduction. Accordingly, health pamphlets, websites, and invitations broadcast a 20% (or 25%) benefit. Did the public know that this impressive number corresponds to a reduction from about five to four in every 1,000 women, that is, 0.1%? The answer is, no. In a representative quota sample in nine European countries, 92% of about 5,000 women overestimated the benefit 10-fold, 100-fold, and more, or they did not know. For example, 27% of women in the United Kingdom believed that out of every 1,000 women who were screened, 200 fewer would die of breast cancer. But it is not only patients who are misled. When asked what the ‘25% mortality reduction from breast cancer’ means, 31% of 150 gynecologists answered that for every 1000 women who were screened, 25 or 250 fewer would die.”
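
The arithmetic behind the figures in this quote can be sketched as follows. The function name `risk_summary` and its return keys are hypothetical, and the sketch assumes two equally sized groups; the inputs (five versus four deaths per 1,000 women) come from the quote itself.

```python
def risk_summary(events_without, events_with, group_size):
    """Compare two equally sized groups (a simplifying assumption).

    events_without: deaths in the group without the intervention
    events_with:    deaths in the group with the intervention
    """
    if events_without <= 0 or group_size <= 0:
        raise ValueError("need a positive baseline count and group size")
    avoided = events_without - events_with
    return {
        # Relative risk reduction: share of the baseline risk removed.
        "rrr": avoided / events_without,
        # Absolute risk reduction: difference between the two risks.
        "arr": avoided / group_size,
        # People who must receive the intervention to avoid one event.
        "nnt": group_size / avoided if avoided else float("inf"),
    }


summary = risk_summary(events_without=5, events_with=4, group_size=1000)
print(f"Relative risk reduction: {summary['rrr']:.0%}")        # 20%
print(f"Absolute risk reduction: {summary['arr']:.1%}")        # 0.1%
print(f"Women screened per death avoided: {summary['nnt']:.0f}")  # 1000
```

The same one death avoided per 1,000 women can be reported as "20%" or as "0.1%", which is why it matters which of the two a press release chooses.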

A risk expressed as a percentage change (relative risk) often looks more spectacular, and is therefore more convincing or favorable from the point of view of companies and promoters of a public health campaign, than the same risk expressed in absolute values (absolute risk). Read the HealthNewsReview.org feature on why this matters and check the simple example they propose in this illustration.

It sounds complex but the example below should help.

Illustration: HealthNewsReview.org — Your Health News Watchdog

In your reporting it’s vital that all numbers are expressed in the same way, that is, in either percentages or absolute numbers. This is the only way the risks, benefits, and alternatives (for instance: do nothing) can be understood properly. Also, consider using absolute numbers in your reporting because more people will be able to understand them.

The Harding Center for Risk Literacy fact boxes and icon arrays on breast and prostate cancer screening are examples of best practice: benefits and harms are expressed in absolute values and are immediately comparable, and the data come from meta-analyses of RCTs.

Illustration: Harding Center for Risk Literacy

Illustration: Harding Center for Risk Literacy

Tip 4: Be Aware of Flaws. And Read the Paper

“A groundbreaking study published by prestigious journal X . . .” You definitely want to avoid putting this line in your story. Biomedical journals are affected by so many issues that even the most prestigious journals have to be scrutinized and cannot per se be considered reliable.

A quick way to understand this is to watch a recording of a seminar at the Liverpool School of Tropical Medicine by British Medical Journal Editor in Chief Fiona Godlee: Why You Shouldn’t Believe What You Read in Medical Journals. Godlee discusses the flaws of the peer-review system. She also is frank about how the business model of scientific journals, and particularly advertising, affects their content. Journals also rely on “reprints,” bulk printed copies of published studies that are paid for by the industry and used for marketing purposes.

There is plenty of literature about commercial influence on the content of medical journals. It’s also important to be aware that researchers’ careers are shaped by well-studied phenomena like “publish or perish” and by what is called the “impact factor” (how widely cited a journal is). Scientists also have to attract funding to their institutions, which can create conflicts of interest that have nothing to do with science or with the common good. A must-read is an evergreen paper by John P. A. Ioannidis: Why Most Published Research Findings Are False.

Unfortunately there are few mechanisms in place to address these issues. One of them is retraction — a study is withdrawn from publication when major flaws are exposed. However, this rarely happens. Check Retraction Watch, a great resource to find stories.

So how do we manage this complexity as journalists? One shortcut is to search for journals that are truly independent from the pharmaceutical industry. There are many worldwide, all members of the International Society of Drug Bulletins.

It is even better to seek out the best available evidence yourself, much as you would do as a journalist in any other field. Which means: search, search, search. Also don’t just read the study’s abstract. Always read the full text, regardless of the journal, the author, or what an expert told you. But of course this is demanding work that will require time, patience, and having already acquired more than some basic knowledge in relevant methodologies and critical appraisal strategies.

Where do you find scientific studies? PubMed, from the US National Library of Medicine (NLM), is a free, searchable database of more than 30 million citations and abstracts of biomedical literature. MEDLINE, also from NLM, is a bibliographic database with more than 26 million references to journal articles in life science, with a concentration on biomedicine. However, many studies are not open access, which puts barriers in the way of those who wish to research and investigate. Most scientists work for publicly funded institutions, and journals don’t pay the researchers when they publish their studies. Yet the journals charge those same institutions for expensive subscriptions. Read more in this EBMLive article: Research without Journals.

Paywalled papers might sometimes be available on the internet, or you can email the authors, or their institution, asking for a review copy. Such strategies may not be enough if you want to dig deeper, as you’ll need to retrieve and read many studies. If you can count on a generous budget, you can of course simply buy access to the studies you want to read. On PubMed, you’ll get a link to the publisher’s page, where payments can be made.

As most scientists and investigative medical reporters can’t possibly pay for the large number of studies normally needed to research an issue, the portal by Kazakhstani scientist and computer programmer Alexandra Elbakyan is here to help: Sci-Hub: To Remove all Barriers in the Way of Science. Elbakyan’s website provides access to a huge number of scientific studies which are normally behind paywalls. On her personal website she describes herself and her project; see also the feature on her in the journal Science. Lawsuits by publishers force Elbakyan to continuously migrate Sci-Hub to different domains, which are regularly posted on Twitter.

Screenshot