Investigating Uber Surge Pricing: A Data Journalism Case Study

My introductory project in computational journalism was to investigate Uber surge pricing in Washington, D.C. The resulting story, published in the Washington Post’s Wonkblog, ended up being about race, but it didn’t start out that way.

Nick Diakopoulos, who leads the University of Maryland’s Computational Journalism Lab, wrote a story for Wonkblog last year showing that surge pricing motivates Uber drivers to move to surging areas but, contrary to Uber’s claims, does not increase the number of drivers on the road. So if drivers are moving toward surge neighborhoods, those neighborhoods experience shorter wait times and therefore better service. Non-surge neighborhoods, on the other hand, will experience longer wait times because there are fewer cars in the area. So we asked: Does surge pricing, and therefore service, differ consistently by neighborhood (census tract)? And what are the demographics of those neighborhoods?

Geographic Data

I used Uber API code written by Nick to obtain the expected wait time (or ETA) for an Uber car and the surge price at any specified location. But the locations, given as latitudes and longitudes, have to be selected and passed to the API. How should we sample D.C.?

Geographic tract data includes latitudes and longitudes for the center-point of each tract, but tract areas range from ~0.08 to ~2.5 square miles. With that in mind, I laid a grid of points over D.C.: I handpicked the starting and ending latitude/longitude and filled in the rest programmatically using Python, at a density that should hit even the smallest tracts. The code also excluded any points that fell outside the District. Once the Uber data were collected, I would then average the data from all points within each tract to obtain one value per tract.
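The grid-and-average step can be sketched in plain Python. This is a minimal illustration rather than the project’s actual code: it assumes the District boundary is a single polygon given as a list of (longitude, latitude) vertices, and it uses a simple ray-casting point-in-polygon test. The function names `make_grid`, `clip_to_boundary` and `average_by_tract`, and the step size, are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if (lon, lat) lies inside the polygon.

    `polygon` is a list of (lon, lat) vertices; the last vertex is
    implicitly joined back to the first.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (y1 > lat) != (y2 > lat):
            # Longitude where this edge meets the horizontal line through the point.
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside  # each crossing to the east flips the state
    return inside


def make_grid(lon_start, lon_end, lat_start, lat_end, step):
    """Evenly spaced (lon, lat) points between hand-picked corner coordinates."""
    n_lon = int(round((lon_end - lon_start) / step)) + 1
    n_lat = int(round((lat_end - lat_start) / step)) + 1
    return [
        (lon_start + i * step, lat_start + j * step)
        for i in range(n_lon)
        for j in range(n_lat)
    ]


def clip_to_boundary(points, boundary):
    """Drop grid points that fall outside the city boundary polygon."""
    return [(lon, lat) for lon, lat in points if point_in_polygon(lon, lat, boundary)]


def average_by_tract(readings):
    """Collapse (tract_id, value) readings into one mean value per tract."""
    grouped = defaultdict(list)
    for tract_id, value in readings:
        grouped[tract_id].append(value)
    return {tract_id: mean(values) for tract_id, values in grouped.items()}


if __name__ == "__main__":
    # Toy example: a unit-square "city" and a 4 x 4 grid around it.
    square = [(0, 0), (0, 1), (1, 1), (1, 0)]
    grid = make_grid(-0.25, 1.25, -0.25, 1.25, 0.5)
    print(len(grid), len(clip_to_boundary(grid, square)))  # → 16 4
```

Note that `step` is in degrees, and a degree of longitude covers less ground than a degree of latitude at D.C.’s latitude, so a real run would choose the spacing with the smallest tract in mind.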

Looking at the resulting grid on a map, I realized that many points fell in parkland, rivers, cemeteries and other places with no actual roads. People are unlikely to be calling an Uber from those places, so I had to find a way to validate the addresses. Fortunately, I was able to do this in Python too; Googling “the thing you want to do Python 3” got me pretty much everything I needed for this project.

My method used a Python package called address, which parses an address into its components, such as building number, street prefix and street name. I required that an address have a number and a street prefix (“street,” “avenue,” “road,” etc.) to be considered “valid.” It’s quick and dirty, but effective! Of course, once the addresses were validated and all offending non-addresses removed, the next question was: Are all tracts still represented, or are there now tracts containing no points?
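The same quick-and-dirty rule can be approximated without a parsing library. The sketch below is a swapped-in technique, not the address package’s API: it assumes each point has already been matched to an address string and simply checks for a leading building number plus a recognized street type. `STREET_TYPES` is a hypothetical, abbreviated list.

```python
import re

# Hypothetical, abbreviated list of street types; a real list would be longer.
STREET_TYPES = {
    "street", "st", "avenue", "ave", "road", "rd", "place", "pl",
    "drive", "dr", "court", "ct", "terrace", "ter", "boulevard", "blvd",
    "lane", "ln", "way", "circle", "cir",
}


def is_valid_address(address):
    """Require a leading building number and a street type in the first line."""
    first_line = address.split(",")[0].strip()
    tokens = re.findall(r"[A-Za-z0-9]+", first_line.lower())
    if not tokens or not tokens[0].isdigit():
        return False  # no building number, e.g. a park or cemetery name
    return any(token in STREET_TYPES for token in tokens[1:])


if __name__ == "__main__":
    print(is_valid_address("1600 Pennsylvania Avenue NW, Washington, DC 20500"))  # → True
    print(is_valid_address("Rock Creek Park, Washington, DC"))  # → False
```

Filtering the sample is then a one-liner, for example `[p for p, a in zip(points, addresses) if is_valid_address(a)]`, where `points` and `addresses` are hypothetical parallel lists.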

I wrote another function to check which tract each point fell in, and then added the center-point of each tract that was not yet represented. These tended to be the tiny tracts in the center of the District. After validating those addresses, I had to go in manually and reposition points in the remaining tracts where the center-points were not valid addresses.
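A coverage check of this kind might look like the following sketch. It assumes tract boundaries are available as simple polygons keyed by a tract ID, with a center-point for each tract; `fill_empty_tracts` is a hypothetical name, and the brute-force loop over every tract is only meant for a city-sized number of tracts. Center-points added this way would still need to pass the address check, and the manual repositioning step is not attempted here.

```python
def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if (lon, lat) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def fill_empty_tracts(points, tracts, centroids):
    """Add a tract's center-point for every tract that no sample point falls in.

    points:    list of (lon, lat) sample locations that passed validation
    tracts:    {tract_id: polygon as a list of (lon, lat) vertices}
    centroids: {tract_id: (lon, lat)} center-point from the census data
    Returns (augmented list of points, sorted ids of the tracts that were empty).
    """
    covered = set()
    for lon, lat in points:
        for tract_id, polygon in tracts.items():
            if point_in_polygon(lon, lat, polygon):
                covered.add(tract_id)
                break  # tracts do not overlap, so stop at the first match
    empty = sorted(set(tracts) - covered)
    return points + [centroids[t] for t in empty], empty
```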

Latitudes/longitudes plotted in HamsterMap to assess locations from which to sample Uber data (surge price multiplier and expected wait time for a car). You can see a subtle grid-like structure created in the initial step; the random-seeming dots come from using center latitude/longitude points in tracts too small for the initial grid to sample, and in tracts where a point was removed for having an invalid address.

Human Data

We scoured the 2014 American Community Survey for metrics that might be relevant to how demographics affect, or are affected by, surge pricing, using both the excellent interactive visualizations at Census Reporter and our own exploratory analysis. We settled on a shortlist of three (poverty, race/ethnicity, and median household income), which we downloaded directly from Census Reporter. Whether you’re interested in income/poverty or race/ethnicity, you need both, since race and wealth tend to covary. One oddity: even though Census Reporter visualized median household income, the so-called raw data it provided had no such column, and no related data from which median income could be calculated. Instead, I obtained it from American FactFinder, which has a slightly more cumbersome process.

Because of the analysis model we used, we needed to dichotomize race/ethnicity. We sought advice from demographers on how best to do this, and eventually settled on two groups: White (including Hispanic White), and people of color, including Black/African American, Asian, Hispanic Black/African American, and Hispanic Asian.
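One way to express this two-group split is to sum detailed race/ethnicity counts into the two groups for each tract. The count names below are hypothetical stand-ins for ACS columns, and this sketch is an illustration of the grouping, not the exact procedure the demographers recommended.

```python
# Hypothetical count names; ACS tables label these groups differently.
WHITE_GROUPS = ["white_non_hispanic", "white_hispanic"]
POC_GROUPS = [
    "black_non_hispanic", "asian_non_hispanic",
    "black_hispanic", "asian_hispanic",
]


def dichotomize(counts):
    """Collapse one tract's detailed counts into percent White vs. people of color."""
    white = sum(counts.get(group, 0) for group in WHITE_GROUPS)
    poc = sum(counts.get(group, 0) for group in POC_GROUPS)
    total = white + poc
    if total == 0:
        return None  # e.g. a tract with no residents in these groups
    return {"pct_white": 100 * white / total, "pct_poc": 100 * poc / total}
```

Because the denominator here is White plus people of color as defined above, residents in categories not listed simply drop out; using the tract’s total population instead would give slightly different shares.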

Other Data-Selection Adjustments

At this point, we also limited the type of Uber car to uberX. This was a practical decision to narrow the scope of our story: some Uber products had very different surge pricing patterns, and including them would have complicated the narrative we were telling.

We later added census tract land area from the geographic data so that we could account for population density. While Nick and I were on a call with Uber representatives for comment, the Uber engineer asked whether we had taken population density into account. We had not at that point, and controlling for it could potentially have made our findings no longer significant. I did not want to leave Uber any room to doubt our statistics in their comment, so while Nick kept them on the phone, I hastily calculated density from the population and land area fields in the geographic data, re-ran the model, and confirmed that controlling for population density did not alter our story (phew!).
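The density calculation itself is short. A minimal sketch, assuming land area is stored in square meters (as in the `ALAND` field of the Census Bureau’s TIGER/Line files) and that each tract is a dict with hypothetical `population` and `aland_sq_m` keys. Only the density step is shown; the statistical model is not reproduced here.

```python
# One statute mile is exactly 1,609.344 m, so a square mile is 1,609.344 ** 2 m^2.
SQ_METERS_PER_SQ_MILE = 1_609.344 ** 2


def population_density(population, land_area_sq_m):
    """People per square mile, given land area in square meters."""
    if land_area_sq_m <= 0:
        raise ValueError("land area must be positive")
    return population / (land_area_sq_m / SQ_METERS_PER_SQ_MILE)


def add_density(tracts):
    """Attach a 'density' field to each tract record (hypothetical field names)."""
    for tract in tracts:
        tract["density"] = population_density(tract["population"], tract["aland_sq_m"])
    return tracts
```

The resulting `density` column can then be added as a covariate alongside the demographic variables when the model is re-run.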

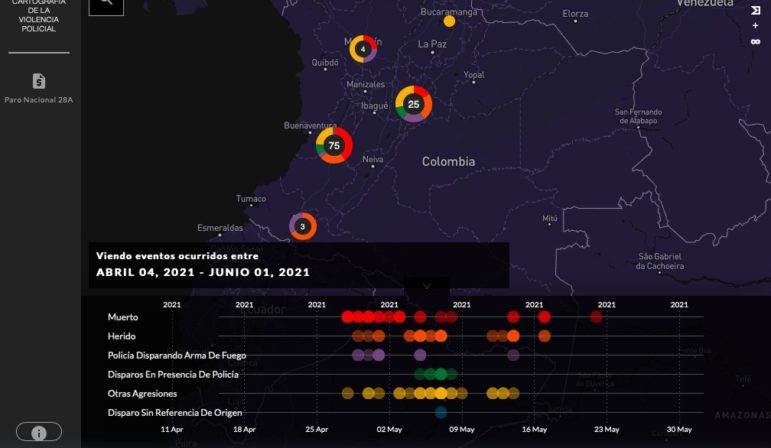

UberX wait times and surge pricing in D.C., shown on Wonkblog.

Open Sourcing

The Tow Center explores how technology is changing how journalists work and how people judge and consume news. Our particular grant from the Tow Center focuses on methods or standards for open sourcing and providing transparency of computational and data journalism investigations. So what does this mean, in a practical sense? Should I make available only my analysis code, or also code used for data collection? Should I also share the data itself? What is the goal of open sourcing? Is it transparency, validation, or enabling others to find their own unique stories?

Reproducibility requires that the code/software and data be made available so that someone else could rerun the exact same analysis on the exact same data. Replicability, on the other hand, requires achieving the same outcome with independent data collection, code and analysis. If the same outcome can be achieved with a different sample, different experimenters and different software, then it is more likely to be true. In my case, and perhaps in data stories across journalism, replicability may not apply because of unique socioeconomic, demographic, governmental or other relationships relevant to the story. For example, we found that wait times for an uberX are longer in neighborhoods with more people of color: if someone carried out a similar analysis on data collected in, say, Warwick, Rhode Island (90% white, 5% poverty, lacking public transport), getting a different result would not make our report on D.C. any less true.

With that in mind, I uploaded a compressed CSV of the raw API data to Google Drive for anyone to access, and made all the code available on GitHub, both for determining the sampling locations across D.C. (small tweaks should let you get location data for any city) and for the data analysis. The repository has been forked four times, and within a week of its release, a temporal analysis was published using our data!

Next

One thing we were unable to do in this study was disentangle the effects of supply and demand. The API only provides the surge price multiplier and the “expected wait time” (ETA) for a car if one were requested. Surge pricing is triggered once a certain number of riders in a given location open the app. Therefore, longer ETAs in underserved neighborhoods could be due to a lack of demand (few people opening the app), leading to less frequent surge pricing and therefore less incentive for drivers to go there.

It’s also possible that longer ETAs reduce demand itself: if potential riders open the app and see long wait times, they may be less likely to use it in the future. One way to assess demand might be to look at taxi data across D.C. Unfortunately, census data include information on transport only for commuting to work, and taxi usage is lumped in with motorcycle and “other.” Instead, I have FOIA’d taxi data from the D.C. Taxi Commission, which has been most helpful.

We were also unable to provide evidence for why there is a lack of demand or supply in underserved areas. This question of “why” is a crucial element that is difficult to get at in algorithmic accountability stories. To try to answer it, we want to explore two more factors: annual crime rates, which could influence where drivers choose to go online or offline based on their perception of risk in different neighborhoods; and banking status. Why banking status? Not having a bank account creates a barrier to entry for Uber, Lyft, and some taxi services, which rely on digital or credit card payments. According to a 2013 study, the unbanked rate in D.C. is 14.5 percent, meaning that almost 15% of D.C. residents do not have bank accounts.

Uber told us that they are “working hard to address a transportation status quo that has been unequal for a long time, making it easier and more affordable for everyone to get around their cities.” But how effective can they be if they fail to reach 15% of a population?

Our data and code are all available, and will be added to and improved as we delve into this project further. Perhaps other investigators will find more stories in our data, or create their own using our code. We’re always looking for advice on how we can improve this process, too, so feel free to contact me (starkja@umd.edu or @_JAStark).

This story originally appeared on Source and is reprinted with permission.

Jennifer A. Stark, Ph.D., is a computational journalist examining algorithmic accountability and transparency. She currently works as a Tow Fellow and research scientist in the Computational Journalism Lab at the University of Maryland’s Merrill College of Journalism. @_JAStark