On the Ethics of Web Scraping and Data Journalism

Web scraping is a way to extract information presented on websites. As I explained in the first instalment of this article, many companies use it.

It’s also a great tool for reporters who know how to code, since more and more public institutions publish their data on their websites.

With web scrapers, which are also called “bots,” it’s possible to gather large amounts of data for stories. For example, I created one to compare alcohol prices in Quebec and Ontario.

My colleague, Florent Daudens, who works for Radio-Canada, also used a web scraper to compare rents in several Montreal neighbourhoods, based on ads from Kijiji.

But what are the ethical rules that reporters have to follow while web scraping?

These rules are particularly important since, for non-geek people, web scraping looks like hacking.

Unfortunately, neither the Code of Ethics of the Fédération professionnelle des journalistes du Québec (FPJQ) nor the ethical guidelines of the Canadian Association of Journalists gives a clear answer to this question.

So I asked a few data reporter colleagues, and looked for some answers myself.

Public Data, or Not?

This is the first consensus from data reporters: if an institution publishes data on its website, this data should automatically be public.

Cédric Sam works for the South China Morning Post, in Hong Kong. He also worked for La Presse and Radio-Canada. “I do web scraping almost every day,” he says.

For him, bots have as much responsibility as their human creators. “Whether it’s a human who copies and pastes the data, or a human who codes a computer program to do it, it’s the same. It’s like hiring 1000 people that would work for you. It’s the same result.”

However, government servers also host personal information about citizens. “Most of this data is hidden because it would otherwise violate privacy laws,” says William Wolfe-Wylie, a developer for CBC and journalism teacher at Centennial College and the Munk School at the University of Toronto.

This is the crucial line between web scraping and hacking: respect for the law.

Reporters should not pry into protected data. If a regular user can’t access it, journalists shouldn’t try to get it. “It’s very important that reporters acknowledge these legal barriers, which are legitimate ones, and respect them,” says William Wolfe-Wylie.

Roberto Rocha, who was until recently a data reporter for the Montreal Gazette, adds that journalists should always read a website’s terms and conditions of use to avoid any trouble.

Another important detail to verify: the robots.txt file, which can be found at the root of the website and which states what is allowed to be scraped or not. For example, here is the file for the Royal Bank of Canada: http://www.rbcbanqueroyale.com/robots.txt
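As a rough sketch of how a bot could run this check itself, Python’s standard library includes `urllib.robotparser`. The rules and the bot name below are made up for illustration; they are not taken from any real website’s file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

# Hypothetical name the bot would announce for itself.
BOT_NAME = "newsroom-scraper"


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether the bot may fetch each page under the sample rules.
print(parser.can_fetch(BOT_NAME, "https://example.org/prices/"))
print(parser.can_fetch(BOT_NAME, "https://example.org/private/x"))

# Some sites also request a minimum delay between hits.
print(parser.crawl_delay(BOT_NAME))
```

For a live site, the same parser can load the real file with `set_url()` followed by `read()` before calling `can_fetch()`.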

Identify Yourself, or Not?

When you are a reporter and you want to ask someone questions, the first thing to do is to present yourself and the story you are working on.

But what should you do when it’s a bot that is sending queries to a server or a database? Should the same rule apply?

For Glen McGregor, national affairs reporter for the Ottawa Citizen, the answer is yes. “In the HTTP headers, I put my name, my phone number and a note saying: ‘I am a reporter extracting data from this webpage. If you have any problem or concern, call me.’

“So, if the web administrator suddenly sees a huge amount of hits on his website, freaks out and thinks he’s under attack, he can check who’s doing it. He will see my note and my phone number. I think it’s an important ethical thing to do.”

Jean-Hugues Roy, a journalism professor at the Université du Québec à Montréal and himself a web scraper coder, agrees.
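Here is a minimal sketch of what identifying yourself in the HTTP headers might look like, using Python’s standard `urllib.request`. It is not McGregor’s actual code: the function name, the reporter’s name, the phone number and the URL are all placeholders, and putting the note in the `User-Agent` header is one possible choice among others.

```python
from urllib.request import Request


def build_identified_request(url: str, name: str, phone: str, note: str) -> Request:
    """Build (but do not send) a request whose User-Agent names the reporter."""
    # Keep the header on one line and in plain ASCII so it can be sent safely.
    user_agent = f"{name} (reporter; phone: {phone}) - {note}"
    return Request(url, headers={"User-Agent": user_agent})


# Placeholder values, for illustration only.
request = build_identified_request(
    url="https://example.org/data",
    name="Jane Doe",
    phone="555-0100",
    note="I am a reporter extracting data from this webpage. "
         "If you have any problem or concern, call me.",
)

# urllib stores header names in capitalized form ("User-agent").
print(request.get_header("User-agent"))
```

Passing `request` to `urllib.request.urlopen()` would actually send it; the sketch stops short of that so nothing is fetched by accident.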

But not everybody is on the same page. Philippe Gohier, web editor-in-chief at L’Actualité, does everything he can to avoid being identified.

“Sometimes, I use proxies,” he says. “I change my IP address and I change my headers too, to make it look like a real human instead of a bot. I try to respect the rules, but I also try to be undetectable.”

Not identifying yourself when you are extracting data from a website could be compared, in some ways, to doing interviews with a hidden mic or camera. The FPJQ Code of Ethics states some rules regarding this.

4 a) Undercover procedures

In certain cases, journalists are justified in obtaining the information they seek through undercover means: false identities, hidden microphones and cameras, imprecise information about the objectives of their news reports, spying, infiltrating…

These methods must always be the exception to the rule. Journalists use them when:

* the information sought is of definite public interest; for example, in cases where socially reprehensible actions must be exposed;

* the information cannot be obtained or verified by other means, or other means have already been used unsuccessfully;

* the public gain is greater than any inconvenience to individuals.

The public must be informed of the methods used.

Best practice would generally be to identify yourself in your code, even if it’s a bot that does all the work. However, if there’s a possibility that the targeted institution would change the availability of the data because a reporter tries to gather it, you should make yourself more discreet.

And if you are afraid of being blocked after identifying yourself as a reporter, don’t worry; it’s quite easy to change your IP address.

For some reporters, best practice is also to ask for the data before scraping it. For them, it’s only after a refusal that web scraping should be an option.

This approach has an advantage: if the institution answers quickly and gives you the raw data, it will save you time.

Publish Your Code, or Not?

Transparency is another very important aspect of journalism. Without it, the public wouldn’t trust the reporters’ work. From the FPJQ Code of Ethics:

The vast majority of data reporters publish the data they used for their stories. This act of transparency shows that their reports are based on real facts that the public can check if it wants to. But what about their code?

An error in a web scraper script can completely skew the analysis of the data obtained. So should the code be public as well?

For open-source software, to reveal the code is a must. The main reason is to allow others to improve the software, but also to give confidence to the users who can check what the software is doing in detail.

However, for coder-reporters, to reveal or not to reveal is a difficult choice.

“In some ways, we are businesses,” said Sam. “I think that if you have a competitive edge and if you can continue to find stories with it, you should keep it to yourself. You can’t reveal everything all the time.”

For Roberto Rocha, the code shouldn’t be published.

However, Rocha has a GitHub account where he publishes some of his scripts, as Chad Skelton, Jean-Hugues Roy and Philippe Gohier do.

“I really think that the tide lifts all boats,” said Gohier. “The more we share scripts and technology, the more it will help everybody. I’m not doing anything that someone can’t do with some effort. I am not reshaping the world.”

Jean-Hugues Roy agreed, and added that journalists should allow others to replicate their work, like scientists do by publishing their methodology.

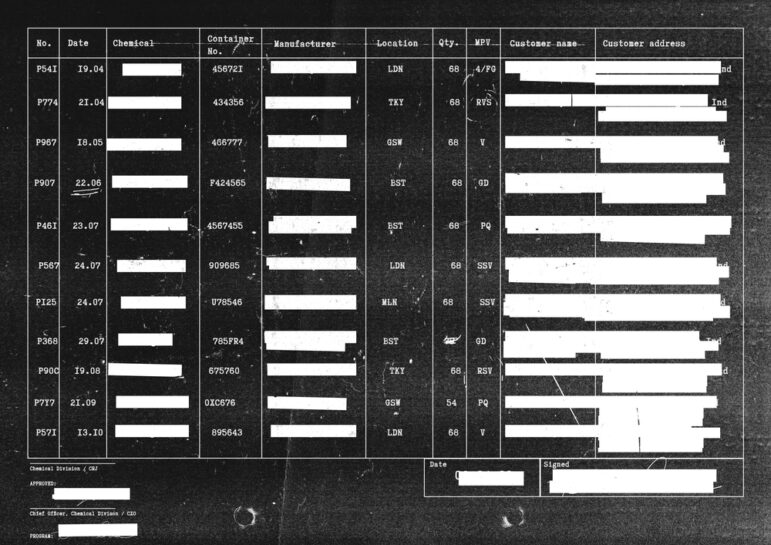

Nonetheless, the professor specifies that there are exceptions. Roy is currently working on a bot that would extract data from SEDAR, where documents from Canadian publicly traded companies are published.

“I usually publish my code, but this one, I don’t know. It’s complicated and I put a lot of time into it.”

Glen McGregor, on the other hand, doesn’t publish his scripts, but sends them if someone asks for them.

When a reporter has a source, he will do everything in his power to protect it. The reporter will do so to earn the confidence of his source, who will hopefully give him more sensitive information. But the reporter also does this to keep his source to himself.

So, in the end, a web scraper could be viewed as the bot version of a source. Another question to consider is whether reporters’ bots will be patented in the future.

Who knows? Perhaps one day a reporter will refuse to reveal his code the same way Daniel Leblanc refused to reveal the identity of his source called “Ma Chouette.”

After all, these days, bots are starting to look more and more like humans.

Note: This is more a technical detail than an ethical dilemma, but to respect the web infrastructure is, of course, another golden rule of web scraping. Always leave several seconds between your requests, and don’t overload servers.
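To make the “several seconds between your requests” rule concrete, here is a small sketch of a polite fetching loop. The function name and the five-second default are my own assumptions, not a standard; `fetch` stands for whatever function your scraper uses to download one page.

```python
import time
from typing import Callable, Iterable, List


def fetch_politely(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch each URL in turn, pausing between consecutive requests.

    `fetch` is supplied by the caller (hypothetical here); `sleep` can be
    swapped out in tests so they do not actually wait.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one.
            sleep(delay_seconds)
        results.append(fetch(url))
    return results
```

If the site’s robots.txt asks for a longer delay than your default, it seems reasonable to use the longer value.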

This post originally appeared on J-Source.CA and is reprinted with permission.

Nael Shiab is an MA graduate of the University of King’s College digital journalism program. He has worked as a video reporter for Radio-Canada and is currently a data reporter for Transcontinental. @NaelShiab
